publications

2025

- IEEE Perv. Comp.

Cross-Reality Lifestyle: Integrating Physical and Virtual Lives Through Multiplatform Metaverse

Yuichi Hiroi, Yuji Hatada, and Takefumi Hiraki

IEEE Pervasive Computing, 2025

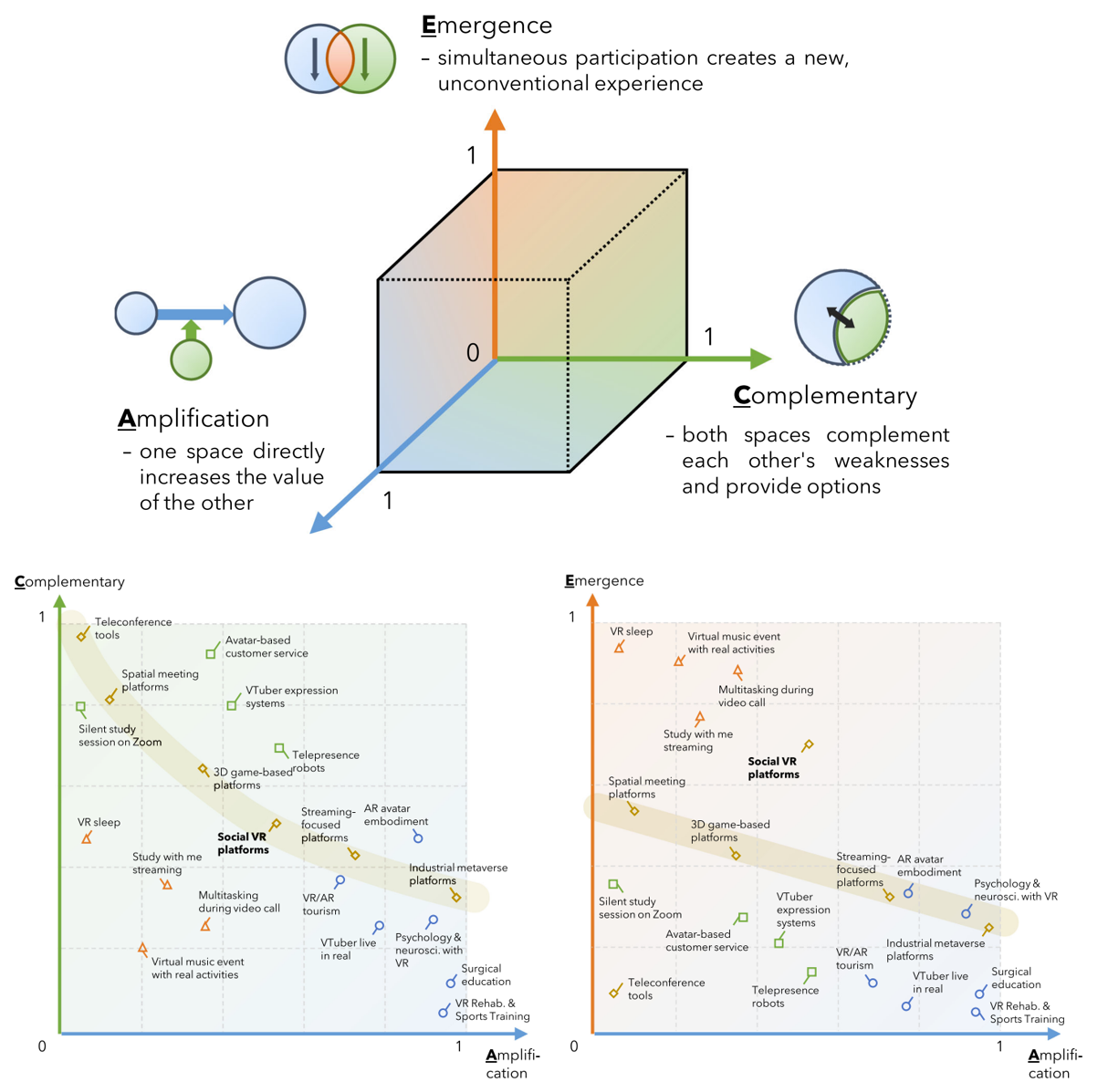

Technological advances are redefining the relationship between physical and virtual spaces. Traditionally, when users engage in virtual reality, they are completely cut off from the physical space. Similarly, they are unable to access virtual experiences while engaged in physical activities. However, modern multiplatform metaverse environments allow simultaneous participation through mobile devices, creating new opportunities for integrated experiences. This study introduces the concept of “cross-reality lifestyles” to examine how users actively combine their physical and virtual activities. We identify three patterns of integration: Amplification, in which one space enhances experiences in the other; Complementary, in which the spaces offer different but equally valuable alternatives; and Emergence, in which simultaneous engagement creates entirely new experiences. We propose the ACE cube framework that analyzes these patterns as continuous characteristics, and by integrating this analysis with technical requirements of commercial platforms, we provide practical guidelines for platform selection, technical investment prioritization, and cross-reality application development.

@article{hiroi2025crossreality, author = {Hiroi, Yuichi and Hatada, Yuji and Hiraki, Takefumi}, journal = {IEEE Pervasive Computing}, title = {Cross-Reality Lifestyle: Integrating Physical and Virtual Lives Through Multiplatform Metaverse}, year = {2025}, volume = {}, number = {}, pages = {1-10}, keywords = {Metaverse;Avatars;Three-dimensional displays;Digital twins;Training;Smart phones;Robots;Pervasive computing;Technical requirements;Fans}, doi = {10.1109/MPRV.2025.3610749}, url = {https://doi.ieeecomputersociety.org/10.1109/MPRV.2025.3610749}, publisher = {IEEE Computer Society}, }

- IEEE Perv. Comp.

MetaGadget: An Accessible Framework for IoT Integration Into Commercial Metaverse Platforms

Ryutaro Kurai, Hikari Yanagawa, Yuichi Hiroi, and Takefumi Hiraki

IEEE Pervasive Computing, 2025

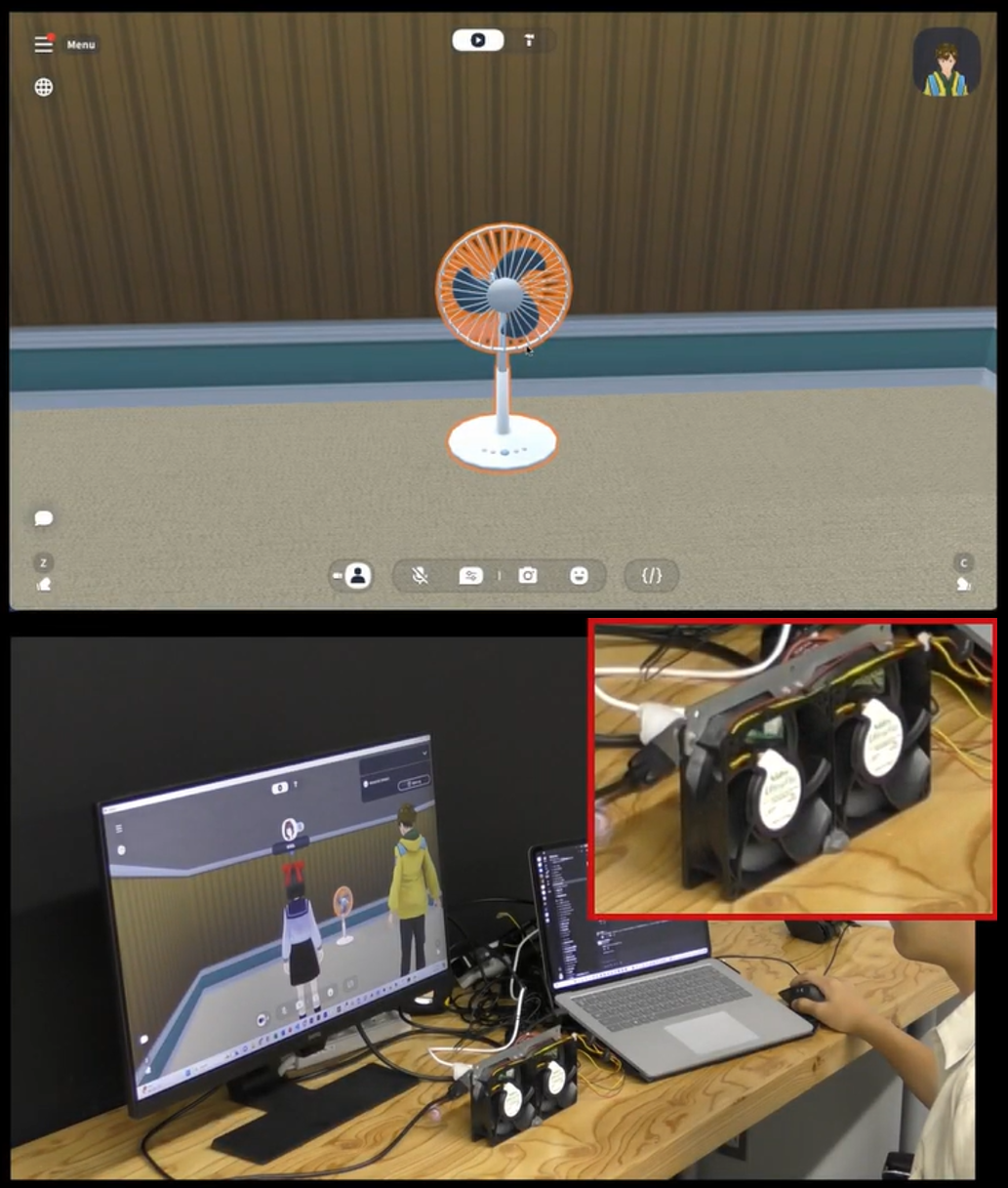

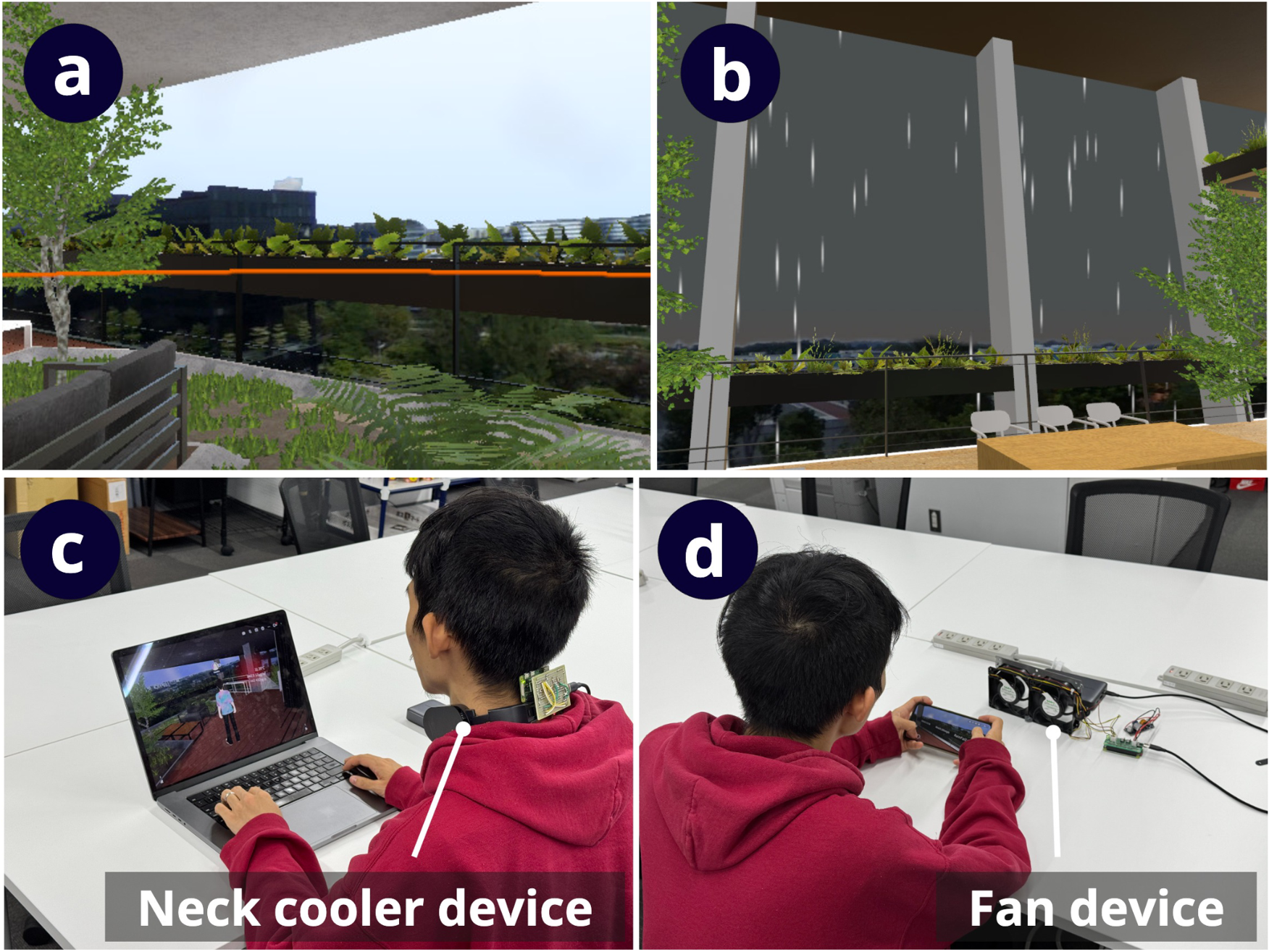

While the integration of Internet of Things (IoT) devices in virtual spaces is becoming increasingly common, technical barriers to controlling custom devices in multiuser virtual reality (VR) environments remain high, particularly limiting new applications in educational and prototyping settings. We propose MetaGadget, a framework for connecting IoT devices to commercial metaverse platforms that implements device control through HTTP-based event triggers without requiring persistent client connections. Through two workshops focused on smart home control and custom device integration, we explored the potential application of IoT connectivity in multiuser metaverse environments. Participants successfully implemented new interactions unique to the metaverse, such as environmental sensing and remote control systems that support simultaneous operation by multiple users, and reported positive feedback on the ease of system development. We verified that our framework provides a new approach to controlling IoT devices in the metaverse while reducing technical requirements and provides a foundation for creative practice that connects multiuser VR environments and physical spaces.

@article{kurai2025metagadget, author = {Kurai, Ryutaro and Yanagawa, Hikari and Hiroi, Yuichi and Hiraki, Takefumi}, journal = {IEEE Pervasive Computing}, title = {MetaGadget: An Accessible Framework for IoT Integration Into Commercial Metaverse Platforms}, year = {2025}, volume = {}, number = {}, pages = {1-11}, keywords = {Metaverse;Internet of Things;Servers;Switches;Conferences;Aerospace electronics;Fans;Control systems;HTTP;Digital twins}, doi = {10.1109/MPRV.2025.3602289}, }

- IEEE Access

MagicCraft: Natural Language-Driven Generation of Dynamic and Interactive 3D Objects for Commercial Metaverse Platforms

Ryutaro Kurai, Takefumi Hiraki, Yuichi Hiroi, Yutaro Hirao, Monica Perusquía-Hernández, Hideaki Uchiyama, and Kiyoshi Kiyokawa

IEEE Access, 2025

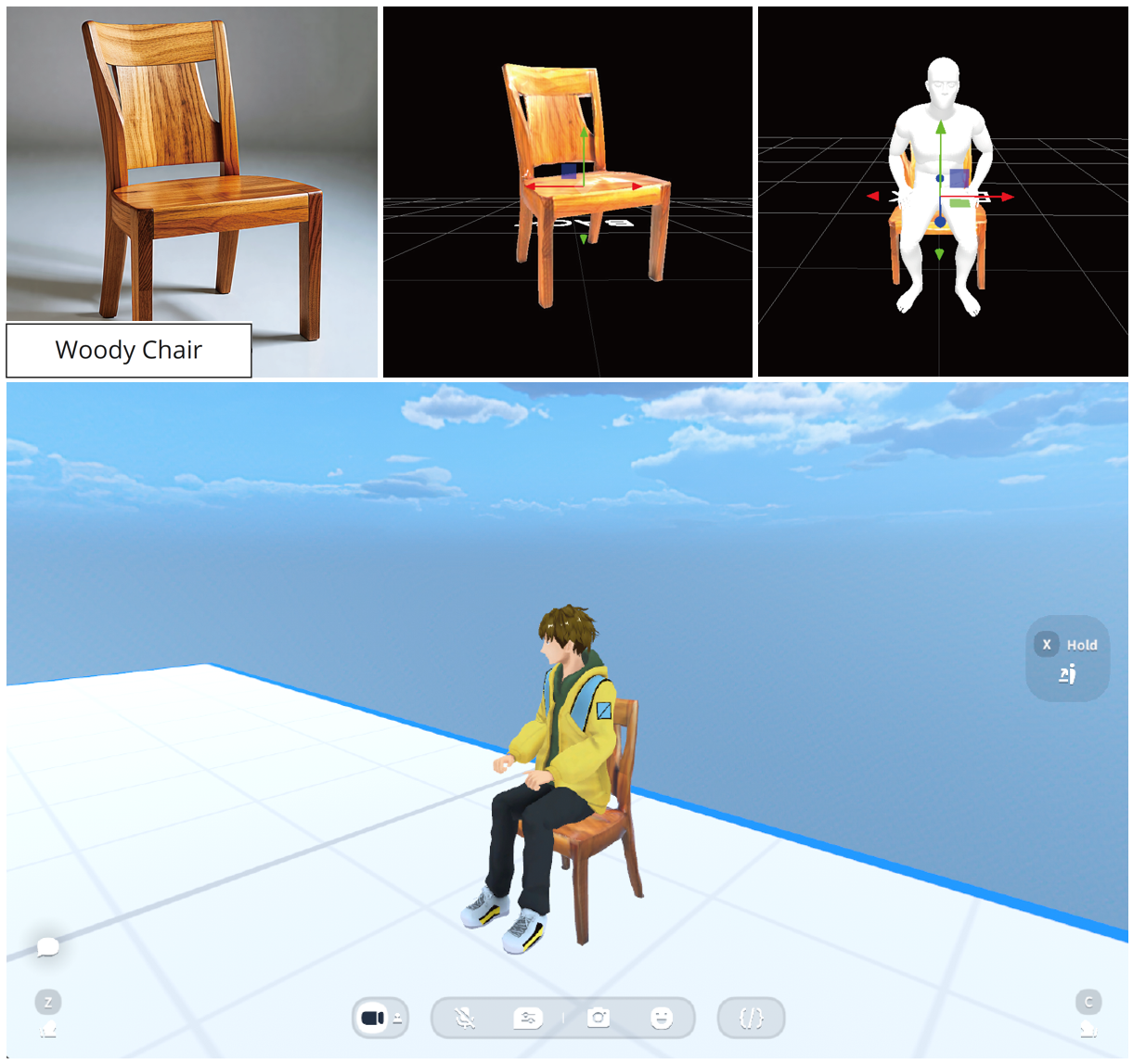

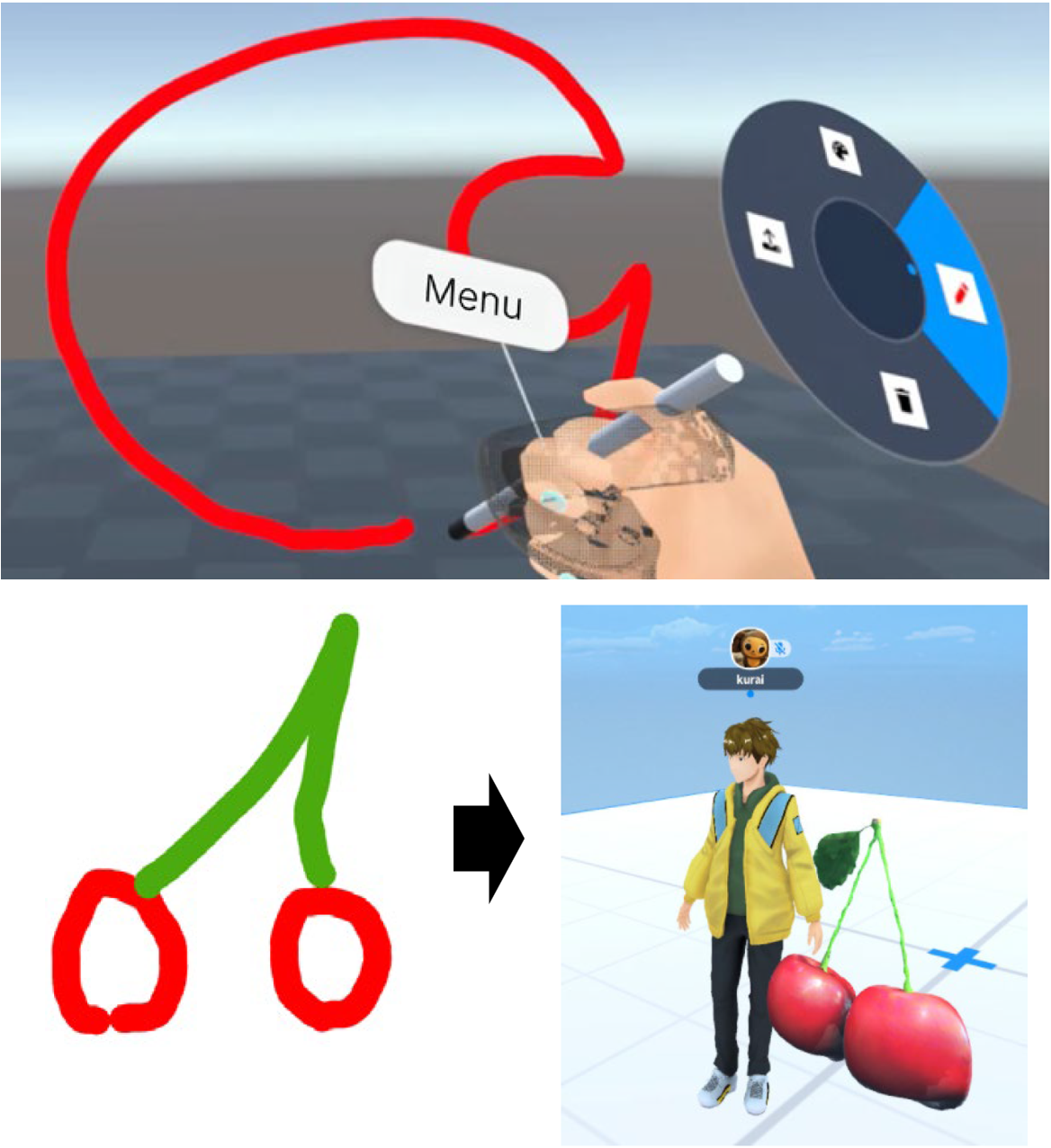

Metaverse platforms are rapidly evolving to provide immersive spaces for user interaction and content creation. However, the generation of dynamic and interactive 3D objects remains challenging due to the need for advanced 3D modeling and programming skills. To address this challenge, we present MagicCraft, a system that generates functional 3D objects from natural language prompts for metaverse platforms. MagicCraft uses generative AI models to manage the entire content creation pipeline: converting user text descriptions into images, transforming images into 3D models, predicting object behavior, and assigning necessary attributes and scripts. It also provides an interactive interface for users to refine generated objects by adjusting features such as orientation, scale, seating positions, and grip points. Implemented on Cluster, a commercial metaverse platform, MagicCraft was evaluated by 7 expert CG designers and 51 general users. Results show that MagicCraft significantly reduces the time and skill required to create 3D objects. Users with no prior experience in 3D modeling or programming successfully created complex, interactive objects and deployed them in the metaverse. Expert feedback highlighted the system’s potential to improve content creation workflows and support rapid prototyping. By integrating AI-generated content into metaverse platforms, MagicCraft makes 3D content creation more accessible.

@article{kurai2025magiccraft, author = {Kurai, Ryutaro and Hiraki, Takefumi and Hiroi, Yuichi and Hirao, Yutaro and Perusquía-Hernández, Monica and Uchiyama, Hideaki and Kiyokawa, Kiyoshi}, journal = {IEEE Access}, title = {MagicCraft: Natural Language-Driven Generation of Dynamic and Interactive 3D Objects for Commercial Metaverse Platforms}, year = {2025}, volume = {13}, number = {}, pages = {132459-132474}, keywords = {Three-dimensional displays;Metaverse;Solid modeling;Generative AI;Codes;Programming;Natural languages;Biological system modeling;Visualization;Pipelines;Metaverse;3D object generation;generative AI;AI-assisted design}, doi = {10.1109/ACCESS.2025.3587232}, }

- IEEE Access

MagicItem: Dynamic Behavior Design of Virtual Objects With Large Language Models in a Commercial Metaverse Platform

Ryutaro Kurai, Takefumi Hiraki, Yuichi Hiroi, Yutaro Hirao, Monica Perusquia-Hernandez, Hideki Uchiyama, and Kiyoshi Kiyokawa

IEEE Access, 2025

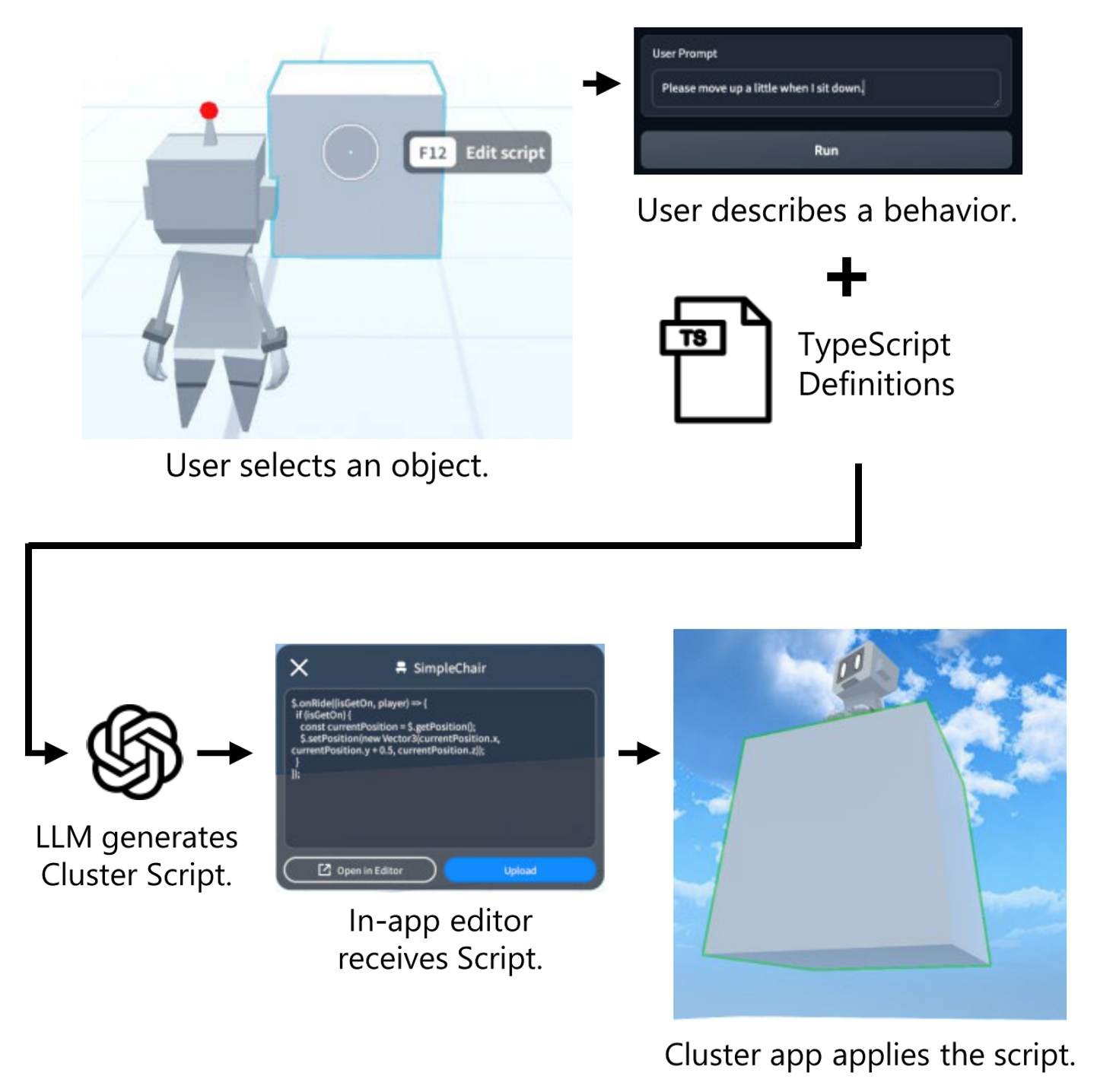

To create rich experiences in virtual reality (VR) environments, it is essential to define the behavior of virtual objects through programming. However, programming in 3D spaces requires a wide range of background knowledge and programming skills. Although Large Language Models (LLMs) have provided programming support, they are still primarily aimed at programmers. In metaverse platforms, where many users inhabit VR spaces, most users are unfamiliar with programming, making it difficult for them to modify the behavior of objects in the VR environment easily. Existing LLM-based script generation methods for VR spaces require multiple lengthy iterations to implement the desired behaviors and are difficult to integrate into the operation of metaverse platforms. To address this issue, we propose a tool that generates behaviors for objects in VR spaces from natural language within Cluster, a metaverse platform with a large user base. By integrating LLMs with the Cluster Script provided by this platform, we enable users with limited programming experience to define object behaviors within the platform freely. We have also integrated our tool into a commercial metaverse platform and are conducting online experiments with 63 general users of the platform. The experiments show that even users with no programming background can successfully generate behaviors for objects in VR spaces, resulting in a highly satisfying system. Our research contributes to democratizing VR content creation by enabling non-programmers to design dynamic behaviors for virtual objects in metaverse platforms.

@article{kurai2025magicitem, author = {Kurai, Ryutaro and Hiraki, Takefumi and Hiroi, Yuichi and Hirao, Yutaro and Perusquia-Hernandez, Monica and Uchiyama, Hideki and Kiyokawa, Kiyoshi}, journal = {IEEE Access}, title = {MagicItem: Dynamic Behavior Design of Virtual Objects With Large Language Models in a Commercial Metaverse Platform}, year = {2025}, volume = {13}, number = {}, pages = {19132-19143}, doi = {10.1109/ACCESS.2025.3530439}, keywords = {Metaverse;Codes;Three-dimensional displays;Large language models;Natural languages;Aerospace electronics;Web servers;Usability;Programming profession;Hands;Large-language model;low-code programming;metaverse platform;virtual reality}, }

- IEEE TVCG

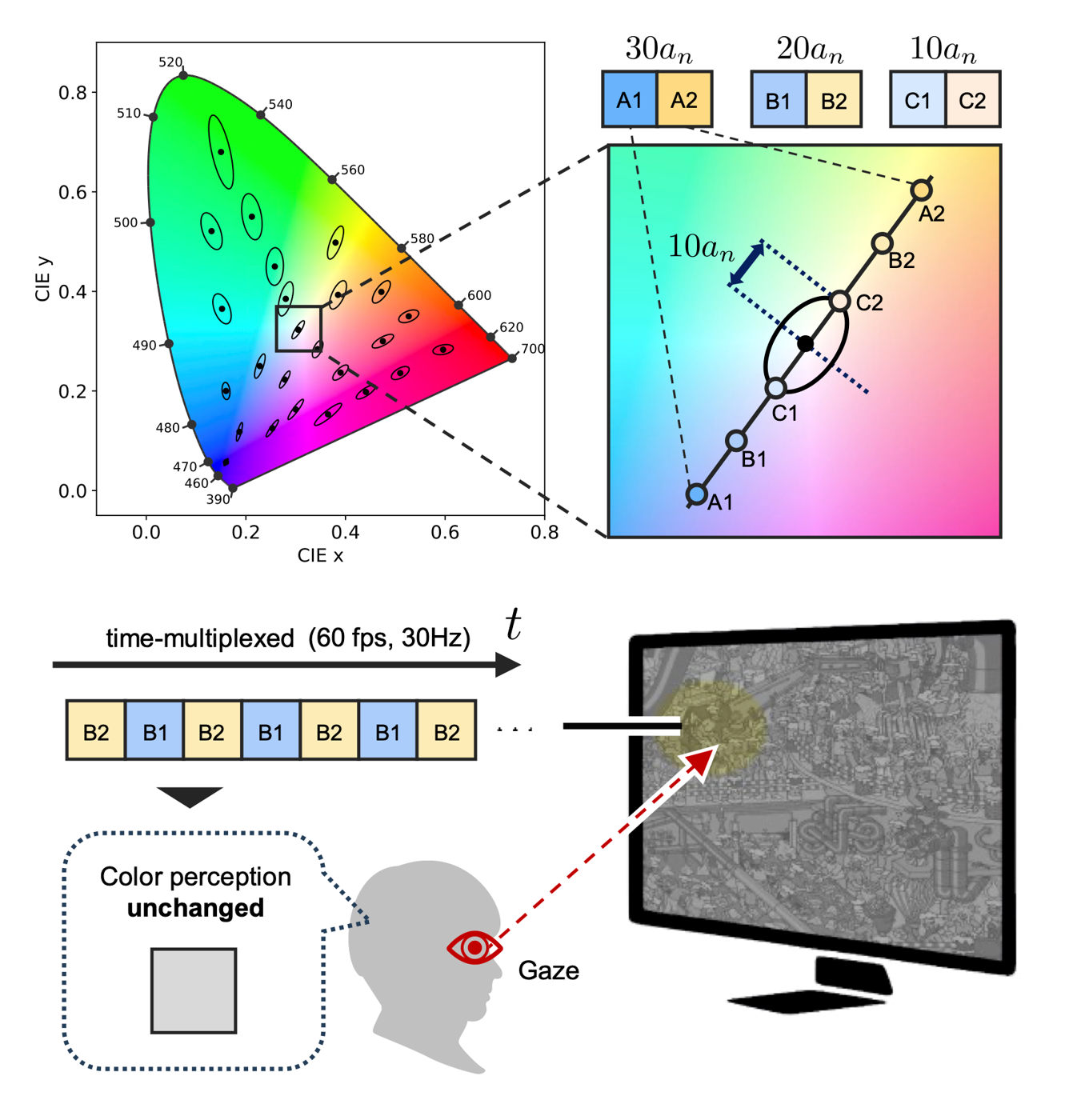

ChromaGazer: Unobtrusive Visual Modulation using Imperceptible Color Vibration for Visual Guidance

Rinto Tosa, Shingo Hattori, Yuichi Hiroi, Yuta Itoh, and Takefumi Hiraki

IEEE Transactions on Visualization and Computer Graphics, 2025

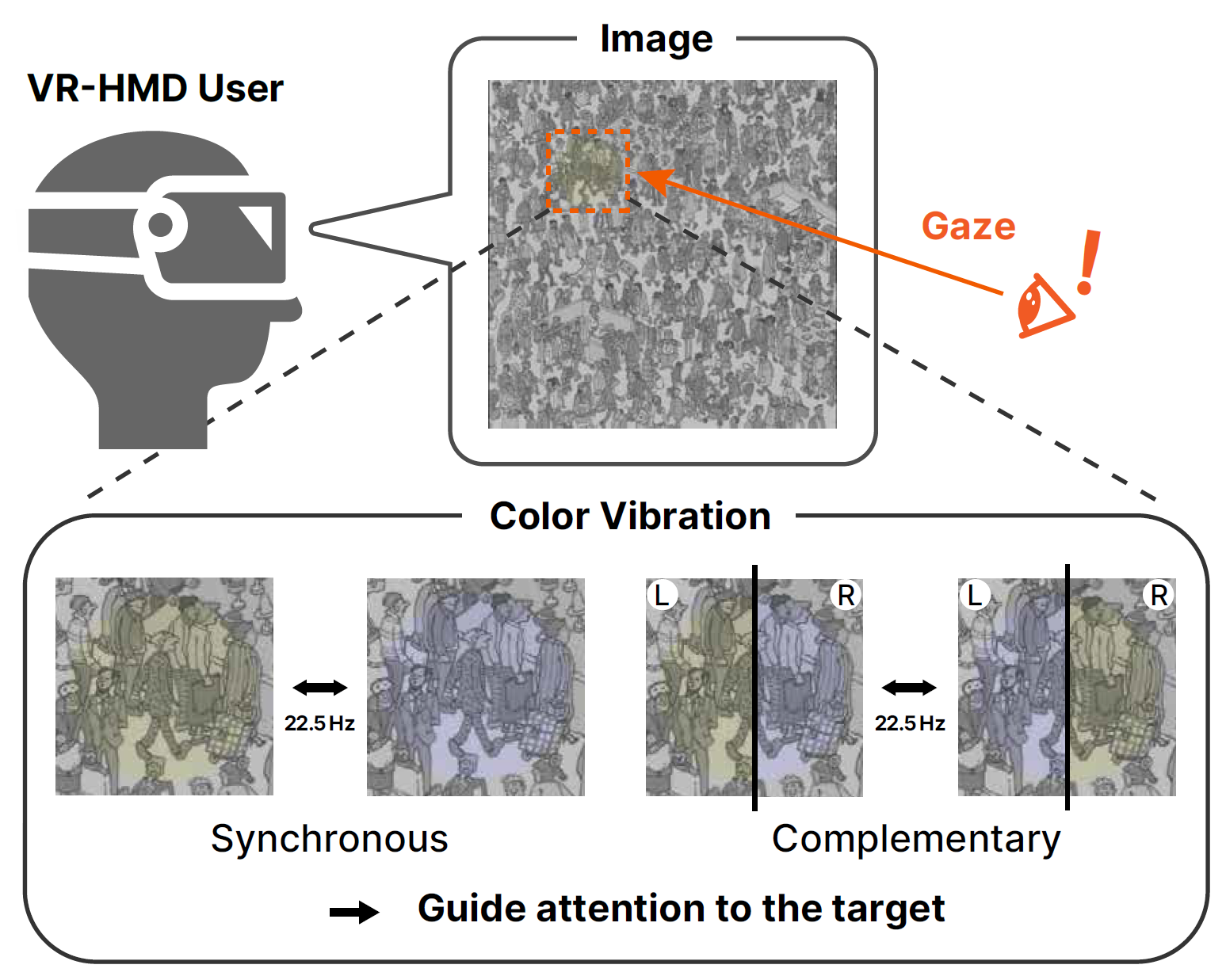

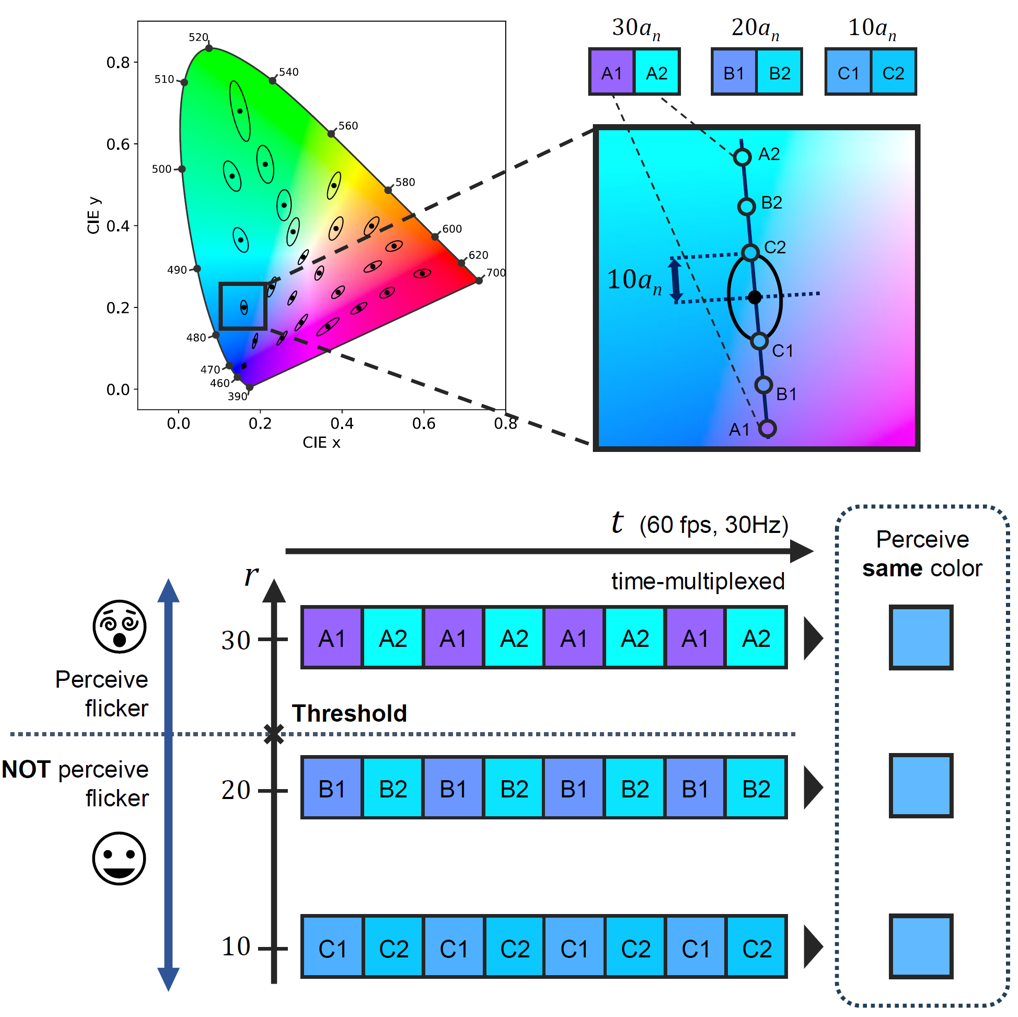

Visual guidance (VG) plays an essential role in directing user attention in virtual reality (VR) and augmented reality (AR) environments. However, traditional approaches rely on explicit visual annotations, which often compromise visual clarity and increase user cognitive load. To address this issue, we propose an unobtrusive VG technique based on color vibration, a phenomenon in which rapidly alternating colors at frequencies above 25 Hz are perceived as a single intermediate color. Our work explores a perceptual state that exists between complete color fusion and visible flicker, where color differences remain detectable without conscious awareness of vibration. Through two experimental studies, we first identified the thresholds separating complete fusion, this intermediate perceptual state, and visible flicker by systematically varying color vibration parameters. Subsequently, we applied color vibrations with derived thresholds to natural image regions and validated their attention-guiding capabilities using eye-tracking measurements. The results demonstrate that controlled color vibration successfully directs user attention while maintaining low cognitive demand, providing an effective method for implementing unobtrusive VG in VR and AR systems.

@article{tosa2025chromagazer, author = {Tosa, Rinto and Hattori, Shingo and Hiroi, Yuichi and Itoh, Yuta and Hiraki, Takefumi}, journal = {IEEE Transactions on Visualization and Computer Graphics}, title = {ChromaGazer: Unobtrusive Visual Modulation using Imperceptible Color Vibration for Visual Guidance}, year = {2025}, volume = {31}, number = {5}, pages = {3450-3458}, keywords = {Visual Guidance;Imperceptible Color Vibration;Color Perception;Augmented Reality}, doi = {10.1109/TVCG.2025.3549173} }

- ACM VRST

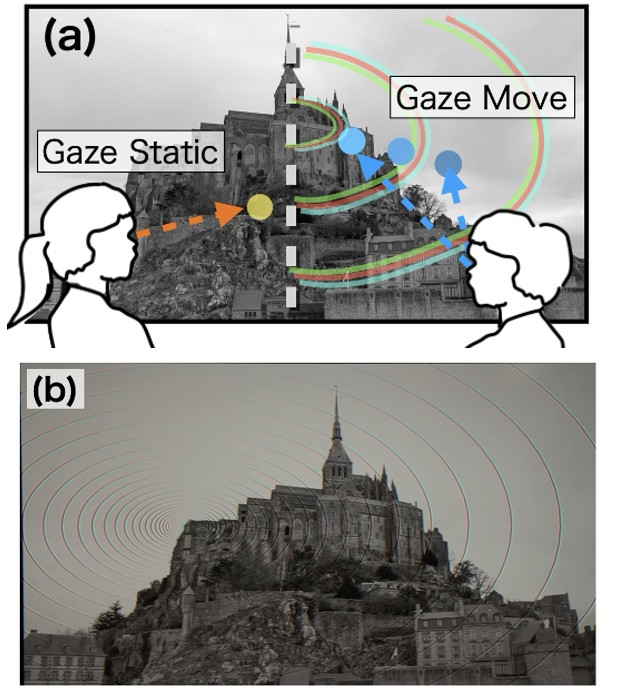

Saccaidance: Saccade-Aware Pattern Embedding for Gaze Guidance on High-Speed Displays

Masahiro Nara, Ryusuke Miyazaki, Yuichi Hiroi, Takefumi Hiraki, Yuta Itoh, and Shio Miyafuji

In Proceedings of the 2025 31st ACM Symposium on Virtual Reality Software and Technology, 2025

Gaze guidance is essential for directing user attention to specific areas of interest. However, conventional visual cues generate persistent visual noise that hinders concentration during tasks. We propose Saccaidance, a gaze-guidance method that appears only when users move their gaze. Saccaidance employs temporal additive color mixing and 480 Hz high-speed displays to shift the color phase of guidance patterns. This renders the patterns barely visible during fixation and makes them appear transiently when users move their gaze as a color-breaking effect. This intermittent gaze guidance appears only during gaze transitions, providing effective guidance without interfering with focused work or requiring eye-tracking hardware. We conducted experiments with 24 participants under four conditions that involved search tasks: an unmodified baseline, conventional explicit guidance, and our proposed method using oval and radial patterns. The results show that our approach effectively constrains the exploration area while preserving subjective naturalness. We also outline application scenarios of our method, including document highlighting.

@inproceedings{nara2025saccaidance, author = {Nara, Masahiro and Miyazaki, Ryusuke and Hiroi, Yuichi and Hiraki, Takefumi and Itoh, Yuta and Miyafuji, Shio}, title = {Saccaidance: Saccade-Aware Pattern Embedding for Gaze Guidance on High-Speed Displays}, year = {2025}, isbn = {9798400721182}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3756884.3766051}, doi = {10.1145/3756884.3766051}, booktitle = {Proceedings of the 2025 31st ACM Symposium on Virtual Reality Software and Technology}, articleno = {36}, numpages = {11}, keywords = {Gaze Guidance, Temporal Additive Color Mixing, Color Vibration, Augmented Reality}, series = {VRST '25}, }

- ACM ICMI

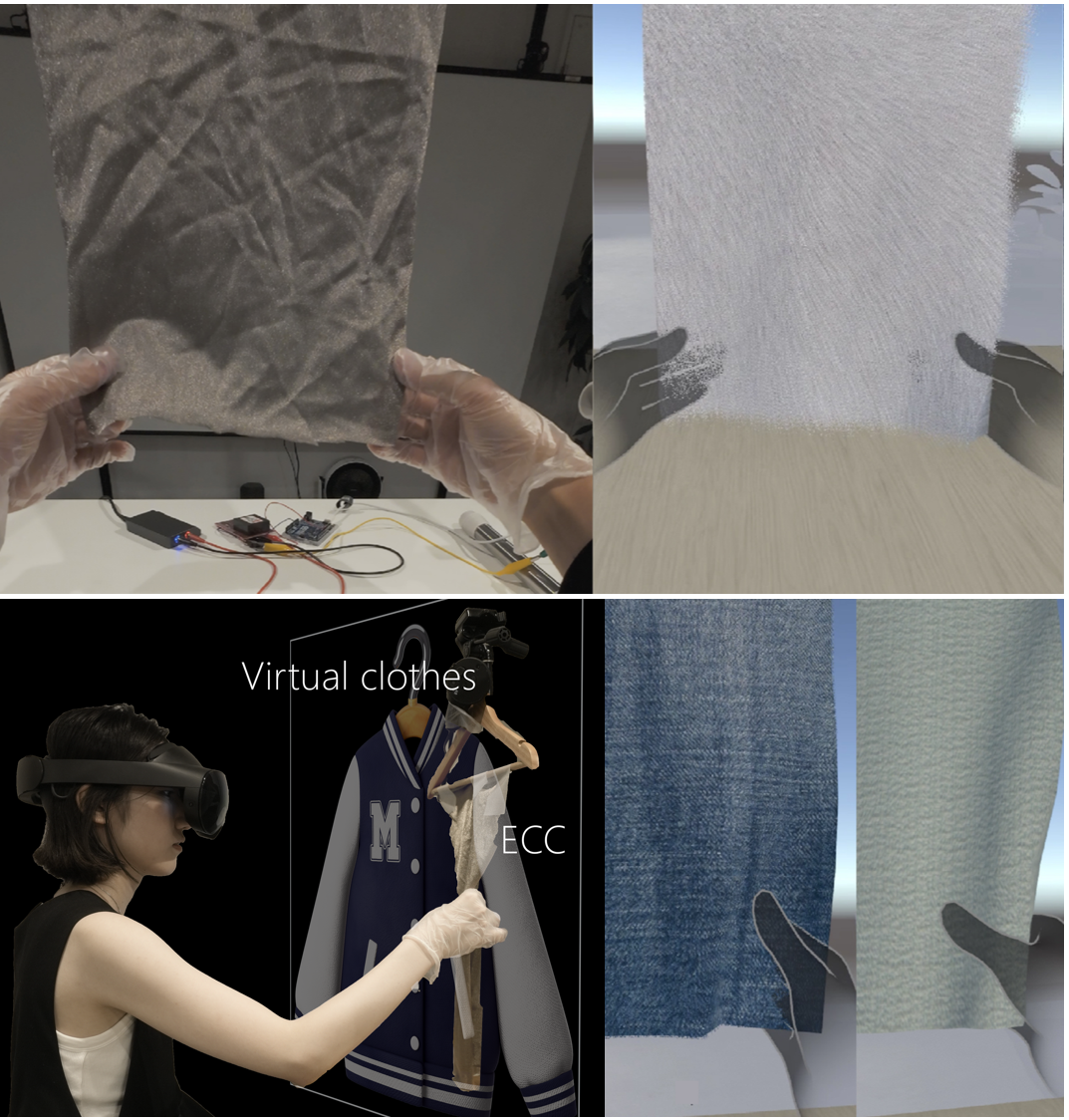

Pinching Visuo-haptic Display: Investigating Cross-Modal Effects of Visual Textures on Electrostatic Cloth Tactile Sensations

Takekazu Kitagishi, Chun Wei Ooi, Yuichi Hiroi, and Jun Rekimoto

In Proceedings of the 27th International Conference on Multimodal Interaction, 2025

Tactile representations of fabrics are critical in virtual retail and VR fitting applications. A pinching tactile display (PTD) using electrostatic adhesion is a fabric-type display that can flexibly adjust tactile sensations by adjusting voltage and frequency, and is expected to be applied to the remote transmission of fabric tactile sensations. However, no significant differences in tactile sensation other than roughness were observed. Building on established research in cross-modal perception, we investigated whether overlaying visual fabric textures could alter the tactile expressiveness of PTDs. We developed a system that integrated a systematically controlled conductive fabric-based PTD with spatially registered VR fabric simulations. Participants rated four tactile properties (roughness, stiffness, thickness, warmth) while touching the same conductive substrate under different visual texture conditions (denim, gauze, toweling, voile). The results showed that voile texture significantly affected stiffness (p=.00887); toweling texture tended to affect warmth (p=.0658); and denim texture tended to affect roughness (p=.0972). These results suggest that virtual texture overlay can affect the warmth, stiffness, and roughness of the PTD. Since the PTD can only change roughness, these results suggest that texture overlay enables the display of tactile sensations that the PTD alone cannot express. On the other hand, no significant influence of texture on thickness was observed. This suggests the pinching motion provided a highly reliable tactile cue, which may have led to visual information being underrated and thus not reaching a significant level. These findings offer strategic insights for employing visual textures to enhance perceived fabric properties, paving the way for deployment in virtual fashion retail and VR fitting applications.

@inproceedings{kitagishi2025pinching, author = {Kitagishi, Takekazu and Ooi, Chun Wei and Hiroi, Yuichi and Rekimoto, Jun}, title = {Pinching Visuo-haptic Display: Investigating Cross-Modal Effects of Visual Textures on Electrostatic Cloth Tactile Sensations}, year = {2025}, isbn = {9798400714993}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, doi = {10.1145/3716553.3750810}, booktitle = {Proceedings of the 27th International Conference on Multimodal Interaction}, pages = {624–633}, numpages = {10}, keywords = {MultiModal; Cross-modal Effect; VR; Physical Simulation; Haptic Display;}, series = {ICMI '25}, }

- IEEE ISEMV

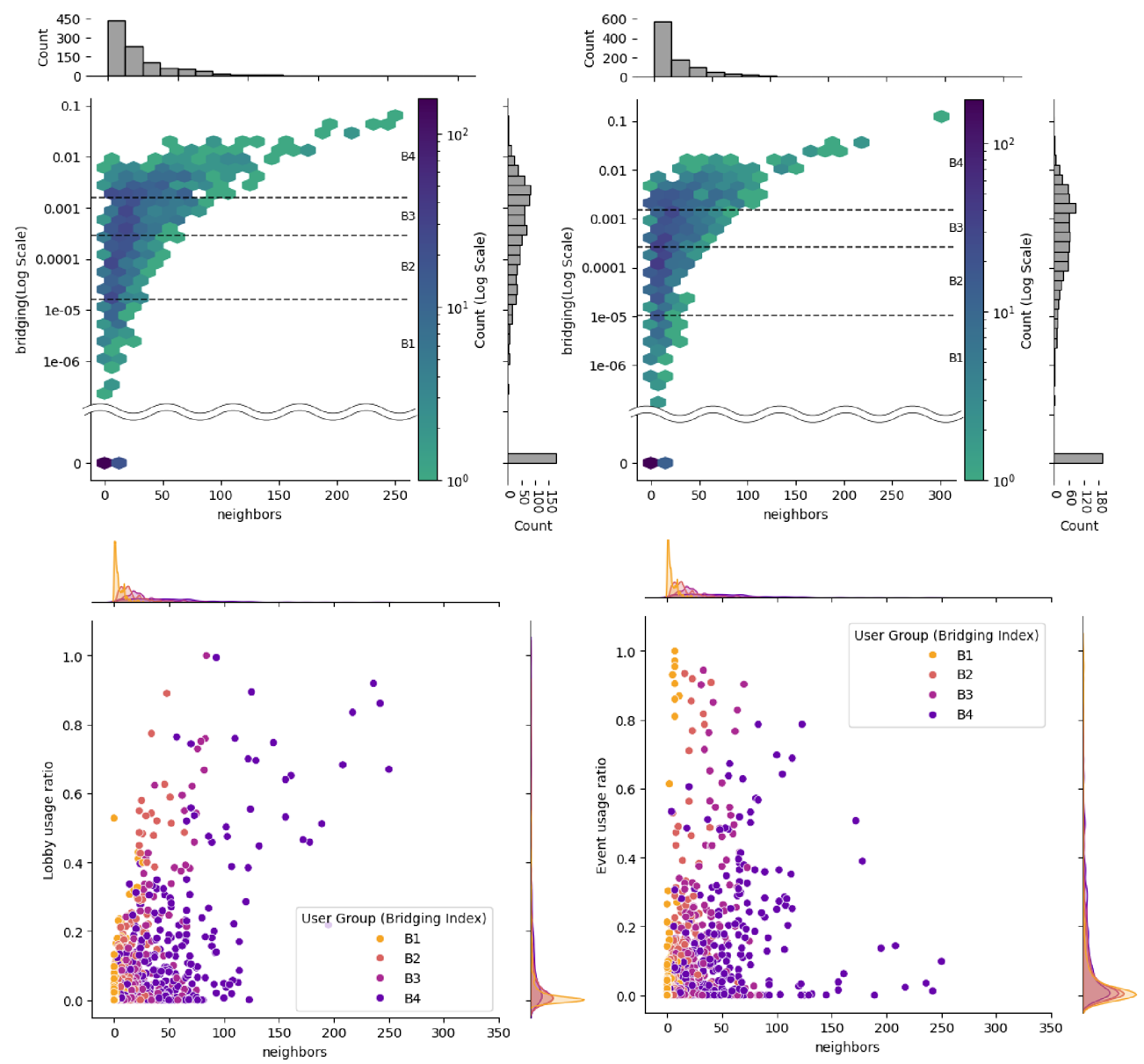

Community Analysis of Social Virtual Reality Based on Large-Scale Log Data of a Commercial Metaverse Platform

Hiroto Tsutsui, Takefumi Hiraki, Yuichi Hiroi, and Shoichi Hasegawa

In Proceedings of 2025 IEEE International Symposium on Emergence Metaverse, Oct 2025

This study quantitatively analyzes the structural characteristics of user communities within Social Virtual Reality (Social VR) platforms supporting head-mounted displays (HMDs), based on large-scale log data. By detecting and evaluating community structures from data on substantial interactions (defined as prolonged co-presence in the same virtual space), we found that Social VR platforms tend to host numerous, relatively small communities characterized by strong internal cohesion and limited inter-community connections. This finding contrasts with the large-scale, broadly connected community structures typically observed in conventional Social Networking Services (SNS). Furthermore, we identified a user segment capable of mediating between communities, despite these users not necessarily having numerous direct connections. We term this user segment ‘community hoppers’ and discuss their characteristics. These findings contribute to a deeper understanding of the community structures that emerge within the unique communication environment of Social VR and the roles users play within them.

@inproceedings{tsutsui2025communityanalysis, title = {Community Analysis of Social Virtual Reality Based on Large-Scale Log Data of a Commercial Metaverse Platform}, author = {Tsutsui, Hiroto and Hiraki, Takefumi and Hiroi, Yuichi and Hasegawa, Shoichi}, year = {2025}, booktitle = {Proceedings of 2025 IEEE International Symposium on Emergence Metaverse}, month = oct, }

- IEEE ISEMV

Implementation and Application of Multi-Format 3D Data Integration in a Cross-Device Commercial Metaverse Platform

Masanori Ibara, Yuichi Hiroi, Takushi Kamegai, and Takefumi Hiraki

In Proceedings of 2025 IEEE International Symposium on Emergence Metaverse, Oct 2025

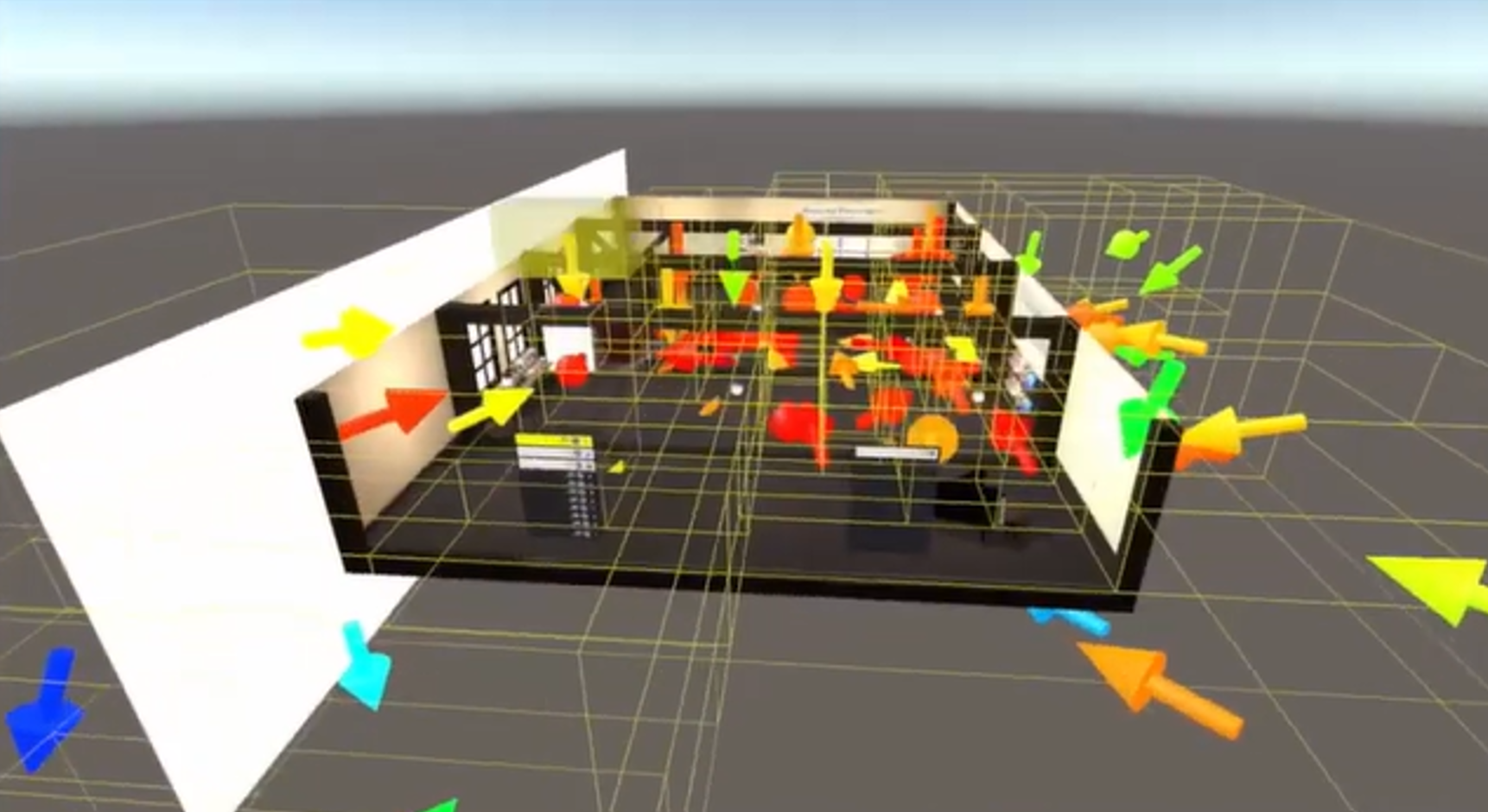

Traditionally, specialized 3D design data, such as BIM and CAD, have been accessible only to a select group of experts, creating significant barriers that prevent general users from participating in decision-making processes. This paper provides a systematic overview of practical insights for utilizing 3D data in industrial and architectural domains by presenting implementation cases of the industrial metaverse on Cluster, a commercial cross-device metaverse platform. This paper analyzes the characteristics and constraints of major data formats in the industrial and architectural fields and organizes integration workflows for the metaverse. Through application cases utilizing 3D data across multiple domains, we present practical examples of collaborative decision-making support enabled by the fusion of metaverse and digital twin technologies. Specifically, we demonstrate that multi-device access and simultaneous multi-user participation capabilities foster democratic environments in the industrial metaverse, which are challenging to achieve with conventional, expert-dependent systems.

@inproceedings{ibara2025implementation, title = {Implementation and Application of Multi-Format 3D Data Integration in a Cross-Device Commercial Metaverse Platform}, author = {Ibara, Masanori and Hiroi, Yuichi and Kamegai, Takushi and Hiraki, Takefumi}, year = {2025}, booktitle = {Proceedings of 2025 IEEE International Symposium on Emergence Metaverse}, month = oct, }

- IEEE ISEMV

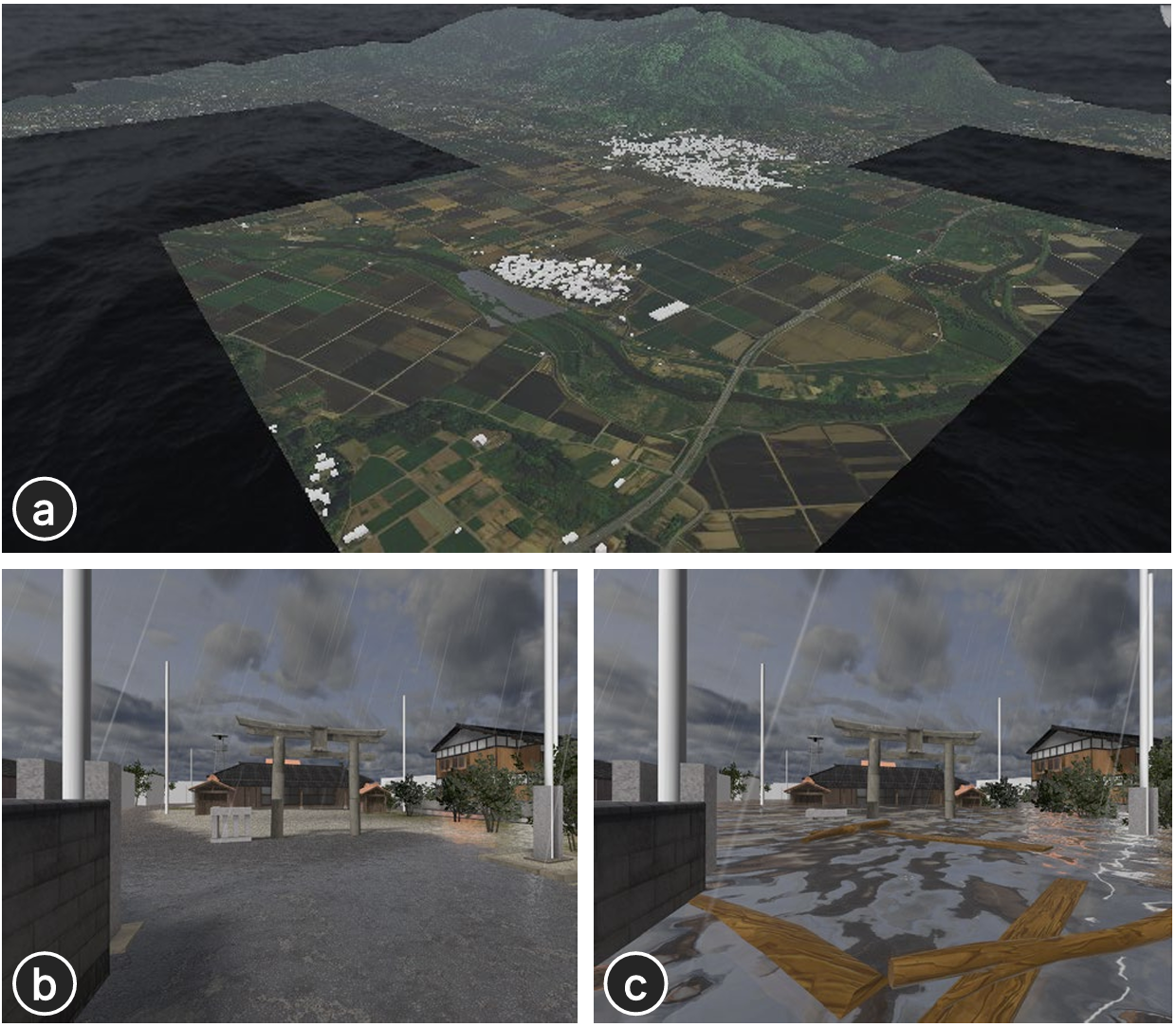

Weather Synchronization in Digital Twin Environments for Shared VR Experience Using Commercial Metaverse Platforms

Masanori Ibara, Yuichi Hiroi, Takushi Kamegai, Yusuke Masubuchi, Kazuki Matsutani, Megumi Zaizen, Junya Morita, and Takefumi Hiraki

In Proceedings of 2025 IEEE International Symposium on Emergence Metaverse, Oct 2025

@inproceedings{ibara2025weather, author = {Ibara, Masanori and Hiroi, Yuichi and Kamegai, Takushi and Masubuchi, Yusuke and Matsutani, Kazuki and Zaizen, Megumi and Morita, Junya and Hiraki, Takefumi}, title = {Weather Synchronization in Digital Twin Environments for Shared VR Experience Using Commercial Metaverse Platforms}, booktitle = {Proceedings of 2025 IEEE International Symposium on Emergence Metaverse}, year = {2025}, volume = {}, number = {}, pages = {to appear}, month = oct, }

- IEEE ISMAR

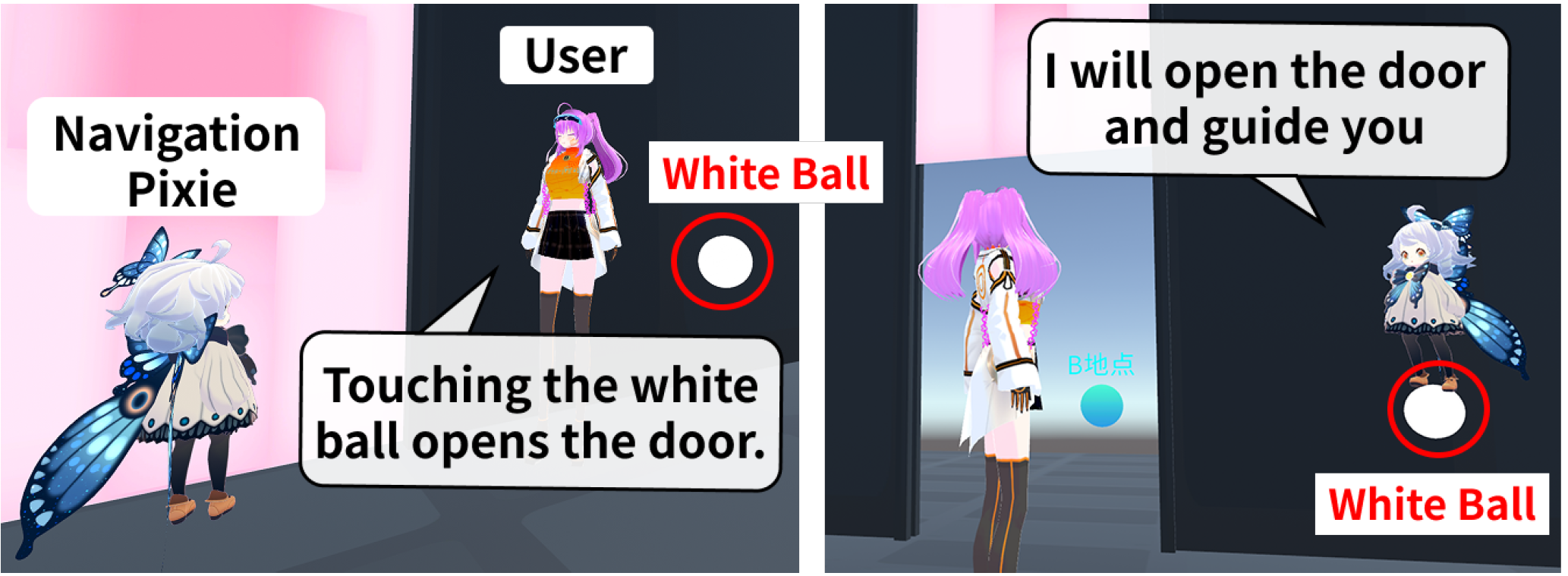

Navigation Pixie: Implementation and Empirical Study Toward On-demand Navigation Agents in Commercial Metaverse

Hikari Yanagawa, Yuichi Hiroi, Satomi Tokida, Yuji Hatada, and Takefumi Hiraki

In Proceedings of 2025 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Oct 2025

While commercial metaverse platforms offer diverse user-generated content, they lack effective navigation assistance that can dynamically adapt to users’ interests and intentions. Although previous research has investigated on-demand agents in controlled environments, implementation in commercial settings with diverse world configurations and platform constraints remains challenging. We present Navigation Pixie, an on-demand navigation agent employing a loosely coupled architecture that integrates structured spatial metadata with LLM-based natural language processing while minimizing platform dependencies, which enables experiments on the extensive user base of commercial metaverse platforms. Our cross-platform experiments on the commercial metaverse platform Cluster with 99 PC client and 94 VR-HMD participants demonstrated that Navigation Pixie significantly increased dwell time and free exploration compared to fixed-route and no-agent conditions across both platforms. Subjective evaluations revealed consistent on-demand preferences in PC environments versus context-dependent social perception advantages in VR-HMD. This research contributes to advancing VR interaction design through conversational spatial navigation agents, establishes cross-platform evaluation methodologies revealing environment-dependent effectiveness, and demonstrates empirical experimentation frameworks for commercial metaverse platforms.

@inproceedings{yanagawa2025navigationpixie, title = {Navigation Pixie: Implementation and Empirical Study Toward On-demand Navigation Agents in Commercial Metaverse}, author = {Yanagawa, Hikari and Hiroi, Yuichi and Tokida, Satomi and Hatada, Yuji and Hiraki, Takefumi}, year = {2025}, booktitle = {Proceedings of 2025 IEEE International Symposium on Mixed and Augmented Reality (ISMAR)}, month = oct, }

- Augmented Humans

ChromaGazer-HMD: Visual Modulation using Unobtrusive Color Vibration for Gaze Guidance with Head-Mounted Displays

Youfang Shen, Rinto Tosa, Yuji Hatada, Yuichi Hiroi, Takefumi Hiraki, and Takeshi Naemura

In Proceedings of the Augmented Humans International Conference 2025, Mar 2025

VR content often involves vast spaces for users to explore, making it uncertain whether they will notice areas or objects highlighted by content creators. While various methods for guiding users’ gaze have been proposed, those that significantly alter the appearance of the content can reduce its naturalness and negatively impact the user experience. In this study, we explored a Visual Guidance (VG) approach using color vibration, based on the human visual system’s inability to perceive rapid chromatic changes. We evaluated its applicability to HMD environments and introduced a method called complementary color vibration, where opposite-phase vibrations are applied to the left and right displays of the HMD. We further investigated optimal parameters for VG and assessed its effectiveness in search tasks. Our experiments revealed that color vibration in HMD environments significantly reduced search times and areas compared to conditions without guidance, while maintaining the naturalness of the content. Furthermore, complementary color vibration was found to preserve naturalness significantly better than conventional synchronous color vibration. These findings indicate that VG using color vibration is effective in HMD environments and that complementary color vibration is less obtrusive than traditional synchronous color vibration.

@inproceedings{shen2025chromagazerhmd, author = {Shen, Youfang and Tosa, Rinto and Hatada, Yuji and Hiroi, Yuichi and Hiraki, Takefumi and Naemura, Takeshi}, title = {ChromaGazer-HMD: Visual Modulation using Unobtrusive Color Vibration for Gaze Guidance with Head-Mounted Displays}, year = {2025}, isbn = {9798400715662}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, doi = {10.1145/3745900.3746090}, booktitle = {Proceedings of the Augmented Humans International Conference 2025}, pages = {43–54}, numpages = {12}, month = mar, }

- IEEE VR

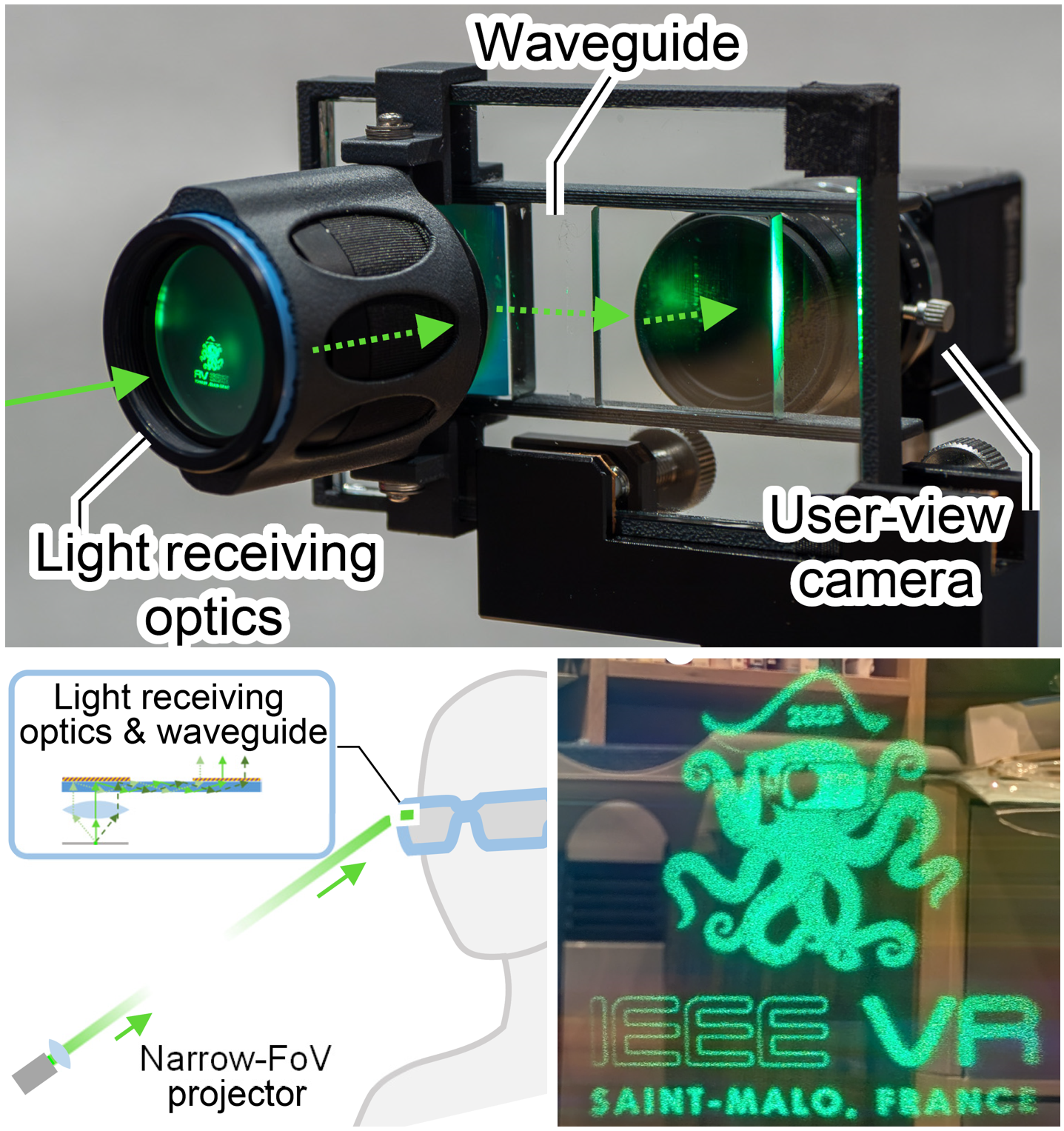

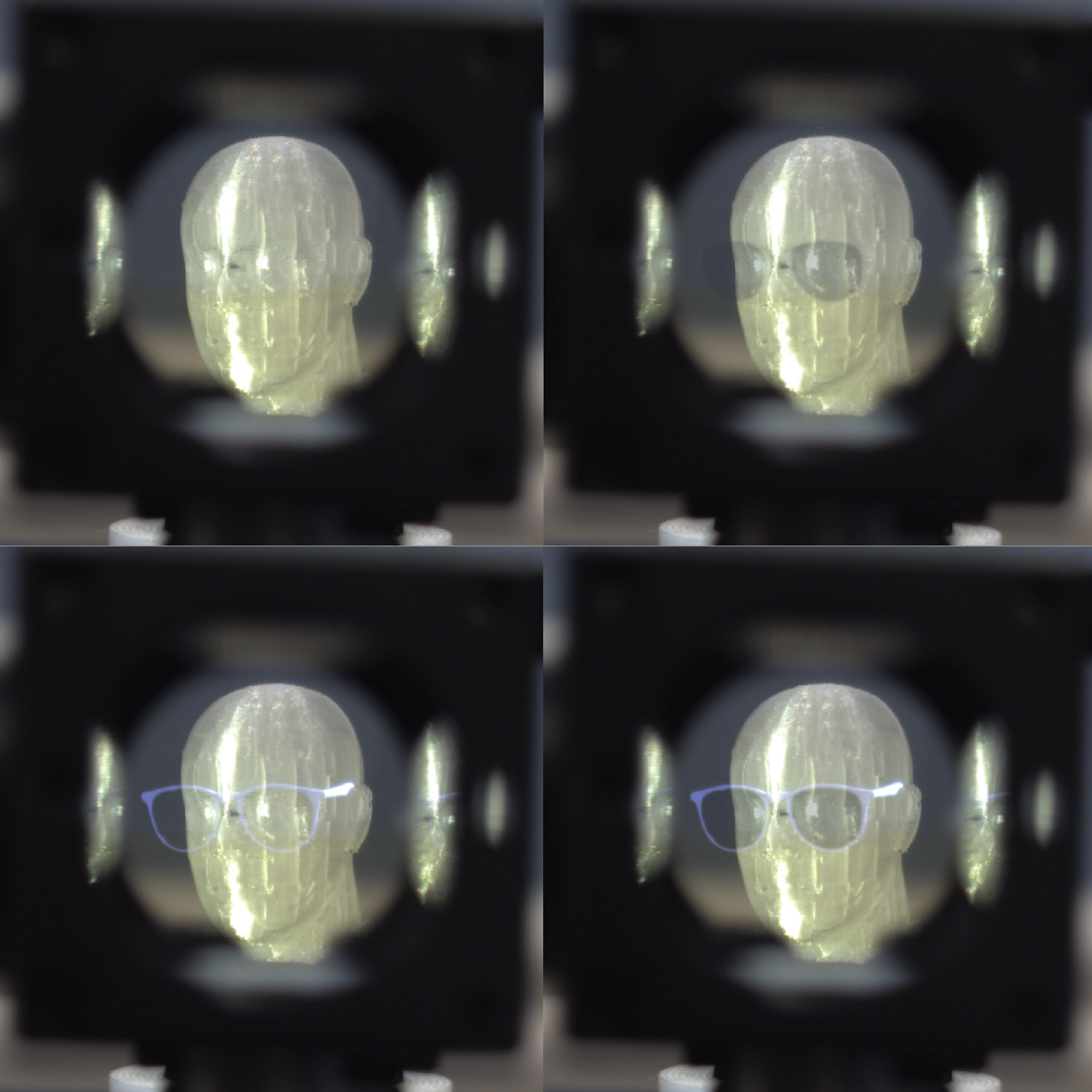

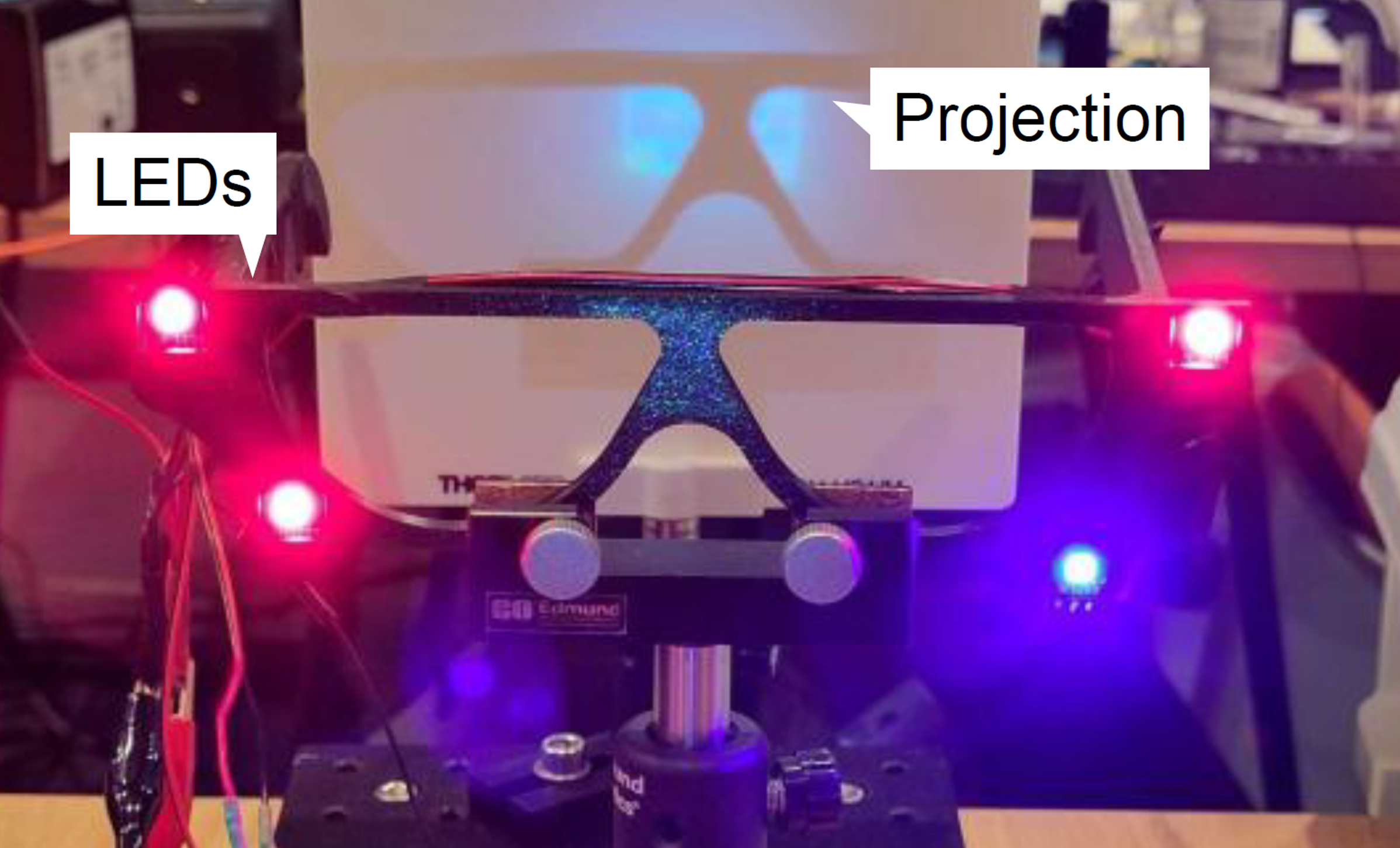

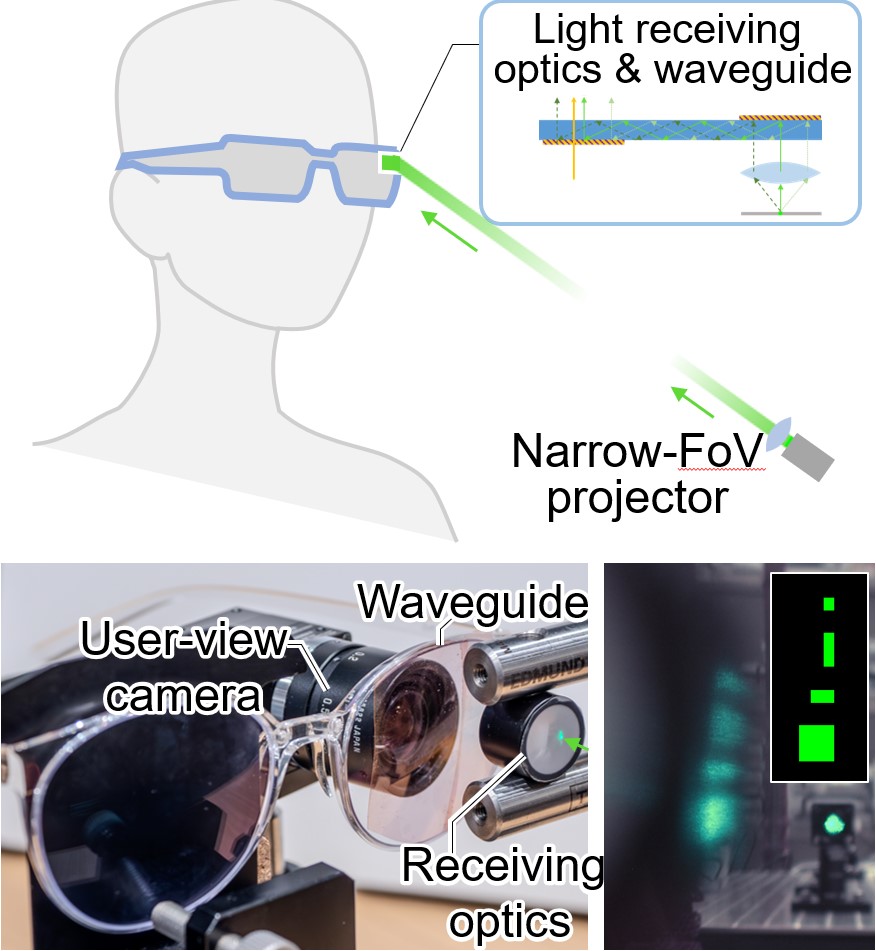

Slim Diffractive Waveguide Glasses for Beaming Displays with Enhanced Head Orientation Tolerance

Yuta Itoh, Tomoya Nakamura, Yuichi Hiroi, and Kaan Aksit

In 2025 IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR), Mar 2025

Augmented Reality (AR) glasses must be slim, lightweight, and energy-efficient to achieve widespread adoption. Beaming Displays present a promising solution by offloading active components, such as the power-supplied light engine, into the surrounding environment while leaving only passive elements, like the eyepiece, in the wearable device. However, existing approaches still struggle to achieve both a slim design and a wide tolerance for projection angles relative to the user’s head orientation. In this work, we introduce a design for light-receiving glasses using a diffractive waveguide with in-coupling and out-coupling gratings. Our approach expands the allowable range of incident angles while maintaining a compact, lightweight form factor. We developed a proof-of-concept prototype and demonstrated an incident angle tolerance of approximately 20-30 degrees, exceeding the 5 degrees of the previous design.

@inproceedings{itoh2025hoebeaming, author = {Itoh, Yuta and Nakamura, Tomoya and Hiroi, Yuichi and Aksit, Kaan}, title = {Slim Diffractive Waveguide Glasses for Beaming Displays with Enhanced Head Orientation Tolerance}, booktitle = {2025 IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR)}, year = {2025}, volume = {}, number = {}, pages = {351-358}, keywords = {beaming display; augmented reality; waveguide; near-eye displays; DOEs}, month = mar, doi = {10.1109/VR59515.2025.00059}, }

- SA Poster

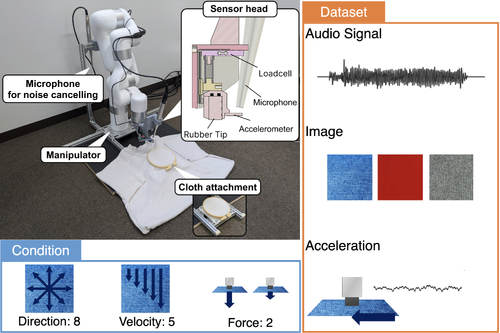

Tactile Data Recording System for Clothing with Motion-Controlled Robotic Sliding

Michikuni Eguchi, Takekazu Kitagishi, Yuichi Hiroi, and Takefumi Hiraki

In Proceedings of the SIGGRAPH Asia 2025 Posters, Hong Kong, Oct 2025

The tactile sensation of clothing is critical to wearer comfort. To reveal physical properties that make clothing comfortable, systematic collection of tactile data during sliding motion is required. Recent studies demonstrate that tactile data obtained through stroking with systematically varied speeds and directions encode material properties more accurately. However, prior methods are optimized for small samples and inadequately address non-destructive recording of intact garments, limiting their scalability to large clothing databases where non-destructive measurement is essential. We propose a robotic arm-based system for collecting tactile data from clothing. The system performs stroking measurements with a simulated fingertip while precisely controlling speeds and directions without cutting clothing, thus enabling the creation of motion-labeled, multimodal tactile databases. Machine learning evaluation showed that including motion-related parameters (velocity and direction) improved identification accuracy for audio and/or acceleration, demonstrating the efficacy of motion-related labels for characterizing clothing tactile sensation.

@inproceedings{eguchi2025tactile, author = {Eguchi, Michikuni and Kitagishi, Takekazu and Hiroi, Yuichi and Hiraki, Takefumi}, title = {Tactile Data Recording System for Clothing with Motion-Controlled Robotic Sliding}, year = {2025}, isbn = {9798400721342}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3757374.3771452}, doi = {10.1145/3757374.3771452}, booktitle = {Proceedings of the SIGGRAPH Asia 2025 Posters}, articleno = {31}, numpages = {3}, keywords = {Haptics, Tactile Sensation, Textiles, Clothes, Machine Learning}, location = {Hong Kong}, series = {SA Posters '25}, month = oct, }

- ISMAR Poster

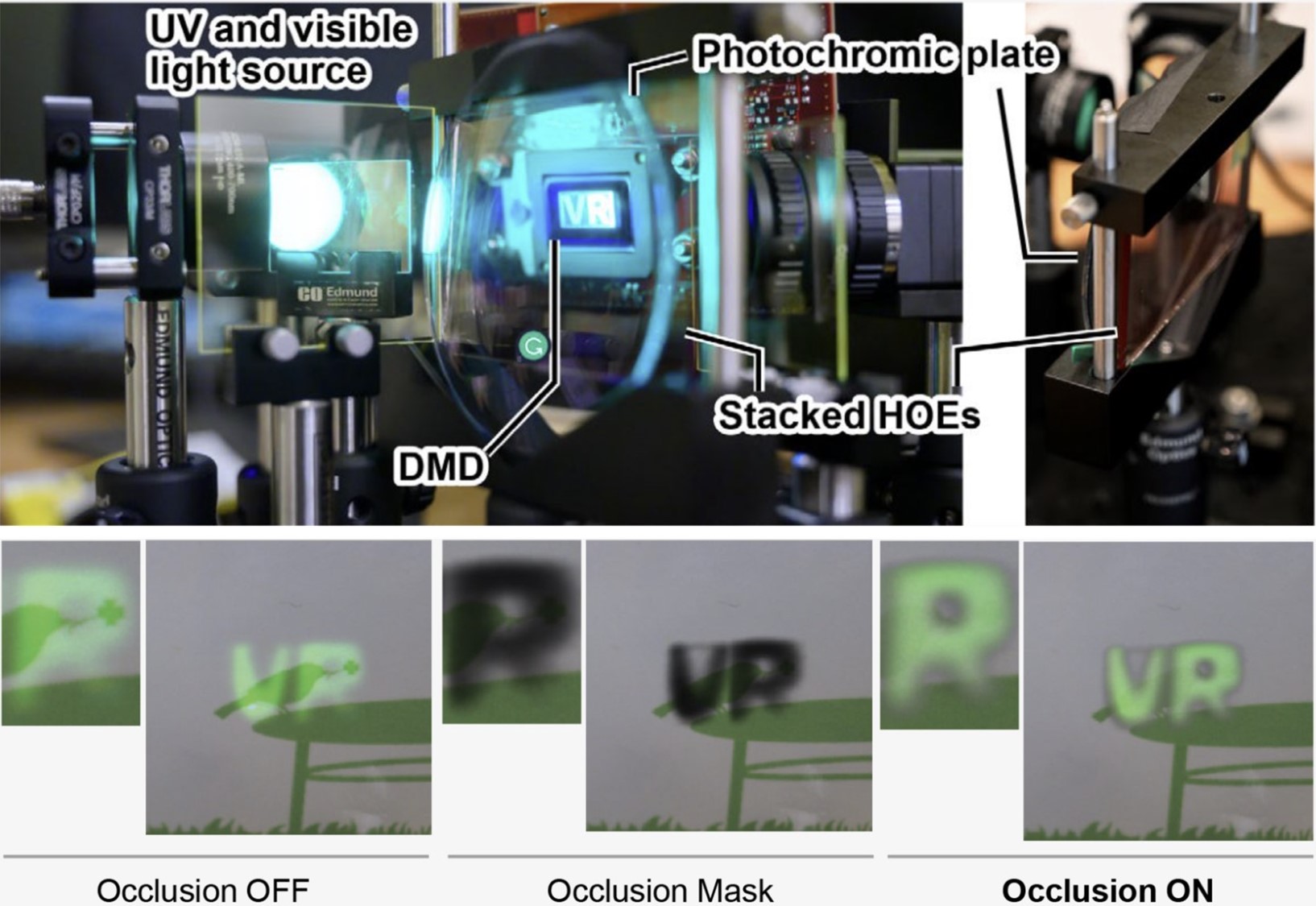

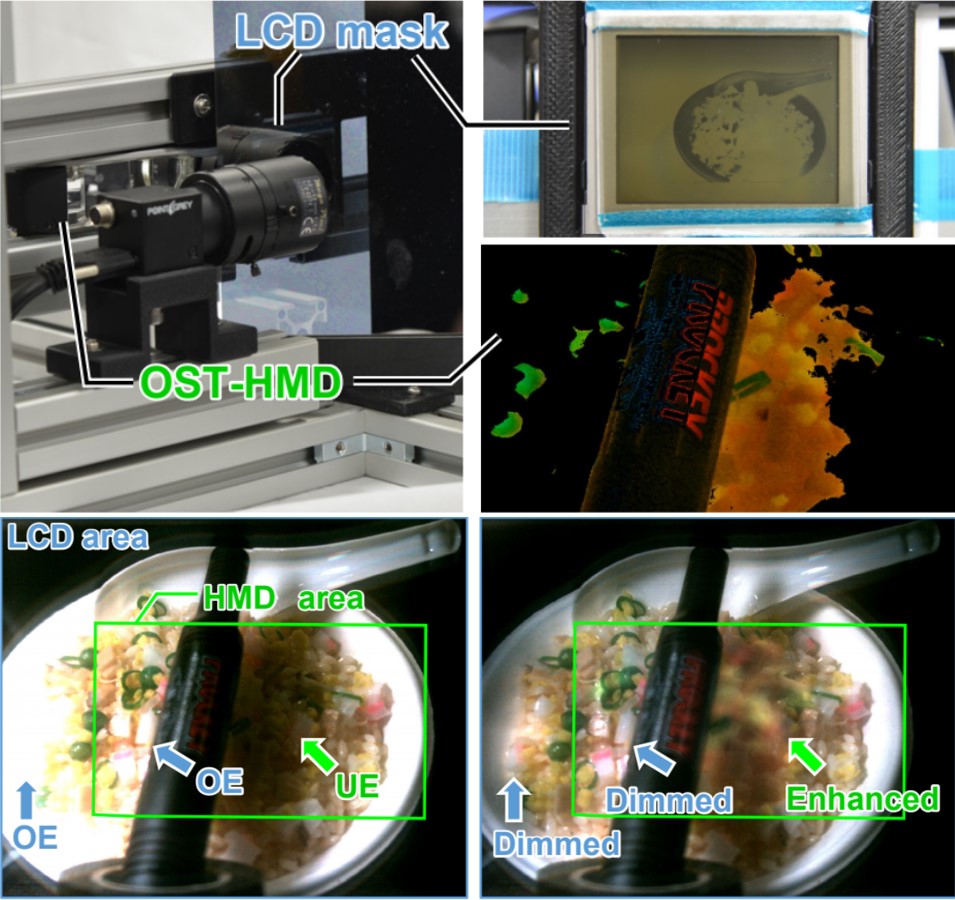

RAPHO: A Rapid Occluding Photochromic Based Occlusion-Capable Display

Chun Wei Ooi, Yuichi Hiroi, Takefumi Hiraki, and Yuta Itoh

In 2025 IEEE International Symposium on Mixed and Augmented Reality Abstracts and Workshops (ISMAR 2025 Poster), Oct 2025

Occlusion capability (OC) has been one of the important topics in designing next-generation optical see-through near-eye displays (OST-NEDs). OC broadens OST-NED applications, including outdoor ones, and improves the spatial perception and perceived realism of virtual objects. However, conventional OC with optoelectronic masks adds bulkiness and visual artifacts in the real-world view. As an emerging direction, photochromic-based occlusion (PO) has the potential to address these issues, yet current implementations have a critical limitation in their response time. In this paper, we provide a real-time PO-OST NED based on fast-response photochromic materials, bringing it one step closer to realizing a lightweight, highly transparent, and visual-artifact-free occlusion solution.

@inproceedings{ooi2025rapho, author = {Ooi, Chun Wei and Hiroi, Yuichi and Hiraki, Takefumi and Itoh, Yuta}, title = {RAPHO: A Rapid Occluding Photochromic Based Occlusion-Capable Display}, booktitle = {2025 IEEE International Symposium on Mixed and Augmented Reality Abstracts and Workshops (ISMAR 2025 Poster)}, year = {2025}, volume = {}, number = {}, pages = {to appear}, month = oct, } - ISMAR Workshop

MagicPen: Interactive Sketch-to-3D Generation in Commercial Metaverse PlatformsRyutaro Kurai, Yuji Hatada, Takefumi Hiraki, and Yuichi HiroiIn 2025 IEEE International Symposium on Mixed and Augmented Reality Abstracts and Workshops (ISMAR 2025 Workshop), Oct 2025

Although User Generated Content (UGC) distinguishes metaverse platforms from conventional VR, 3D content creation remains limited to users with specialized modeling expertise and platform-specific technical knowledge. While generative AI advances have improved accessibility, existing approaches fail to provide both VR-native operation and intuitive input capabilities simultaneously, maintaining barriers for novice users. We propose MagicPen, a system enabling direct conversion of hand-drawn sketches into 3D objects within VR environments through a three-stage AI pipeline comprising image refinement, 3D model generation, and metaverse integration. Evaluation with 68 diverse sketch inputs revealed 35.5-second average processing times and high-fidelity geometric reproduction, validating seamless Cluster platform integration. The system enables collaborative 3D content creation in social VR spaces, allowing users without modeling expertise to transform individual creative workflows into shared social experiences.

@inproceedings{kurai2025magicpen, author = {Kurai, Ryutaro and Hatada, Yuji and Hiraki, Takefumi and Hiroi, Yuichi}, title = {MagicPen: Interactive Sketch-to-3D Generation in Commercial Metaverse Platforms}, booktitle = {2025 IEEE International Symposium on Mixed and Augmented Reality Abstracts and Workshops (ISMAR 2025 Workshop)}, year = {2025}, volume = {}, number = {}, pages = {to appear}, keywords = {Metaverse;Sketch-based Modeling;3D Generative AI}, month = oct, } - ISMAR Workshop

Dynamic Gimmick Learning for Navigation Agents in Social VR Through User-Agent DialogueIn 2025 IEEE International Symposium on Mixed and Augmented Reality Abstracts and Workshops (ISMAR 2025 Workshop), Oct 2025

User-generated interactive mechanisms (“gimmicks”) in metaverse platforms offer unique experiences, but their complexity hinders player exploration. Existing navigation agents, reliant on static metadata, cannot comprehend these dynamic gimmicks. We propose the “Conversational Knowledge Acquisition Loop,” a method for agents to dynamically learn gimmicks through player conversation. A preliminary study showed that our agent successfully acquired knowledge via conversation and applied it to solve analogous gimmicks.

@inproceedings{matsumoto2025dynamic, author = {Matsumoto, Atsuya and Yanagawa, Hikari and Hiroi, Yuichi and Hatada, Yuji and Narumi, Takuji and Hiraki, Takefumi}, title = {Dynamic Gimmick Learning for Navigation Agents in Social VR Through User-Agent Dialogue}, booktitle = {2025 IEEE International Symposium on Mixed and Augmented Reality Abstracts and Workshops (ISMAR 2025 Workshop)}, year = {2025}, volume = {}, number = {}, pages = {to appear}, keywords = {Autonomous Agent;Virtual Reality;Embodied Conversational Agent;Social VR.}, month = oct, } - WHC WiP

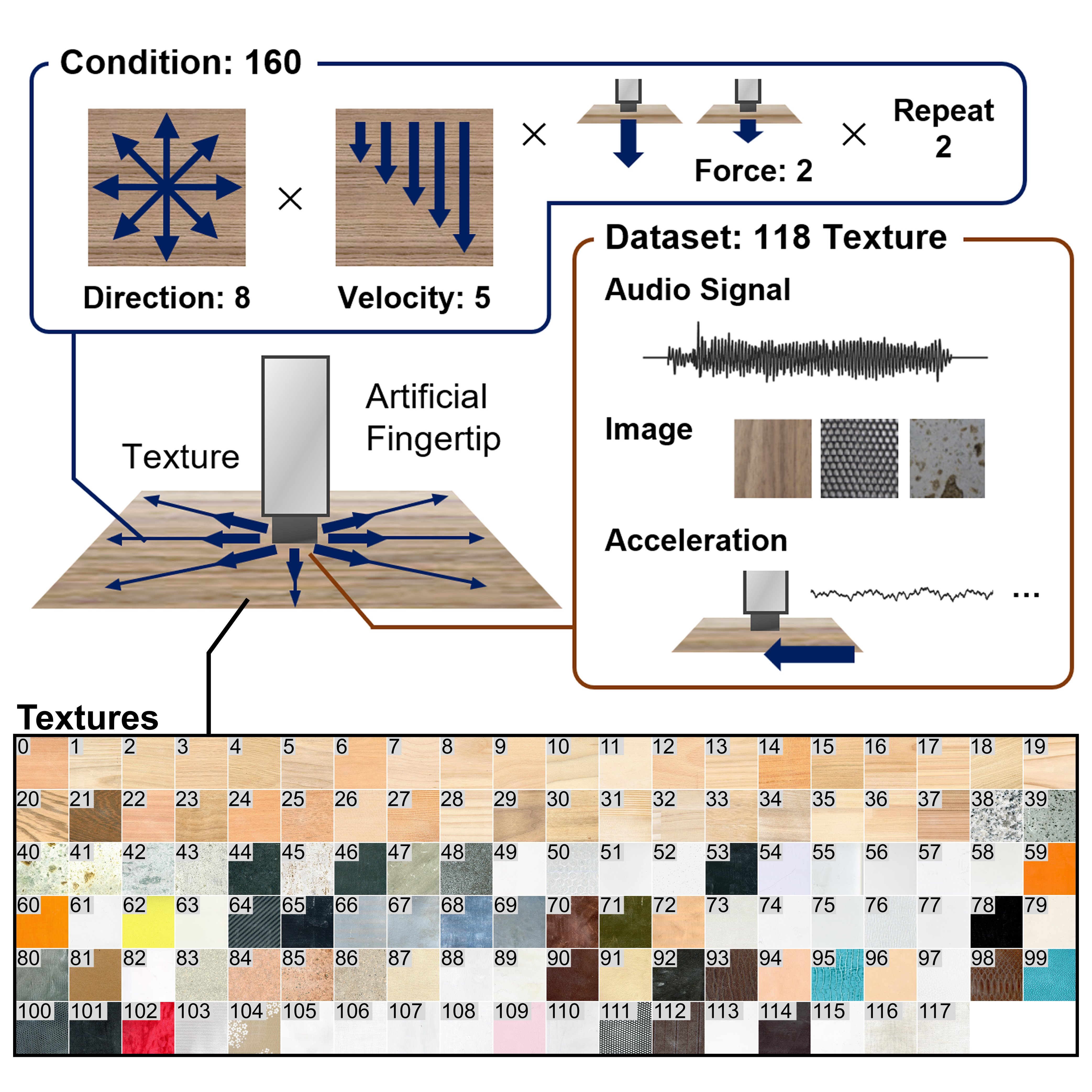

Cluster Haptic Texture Database: Haptic Texture Database with Controlled Sliding-Contact InteractionsIn 2025 IEEE World Haptics Conference Work in Progress (WHC WiP), Jul 2025

Haptic sciences and technologies benefit greatly from comprehensive datasets that capture tactile stimuli under controlled, systematic conditions. However, existing haptic databases collect data through uncontrolled exploration, which hinders the systematic analysis of how motion parameters (e.g., motion direction and velocity) influence tactile perception. This paper introduces Cluster Haptic Texture Database, a multimodal dataset recorded using a 3-axis machine with an artificial finger to precisely control sliding velocity and direction. The dataset encompasses 118 textured surfaces across 9 material categories, with recordings at 5 velocity levels (20-60 mm/s) and 8 directions. Each surface was tested under 160 conditions, yielding 18,880 synchronized recordings of audio, acceleration, force, position, and visual data. Validation using convolutional neural networks demonstrates classification accuracies of 96% for texture recognition, 88.76% for velocity estimation, and 78.79% for direction estimation, confirming the dataset’s utility for machine learning applications. This resource enables research in haptic rendering, texture recognition algorithms, and human tactile perception mechanisms, supporting the development of realistic haptic interfaces for virtual reality systems and robotic applications.
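The counts quoted in this abstract are mutually consistent, which a short enumeration makes explicit. The sketch below is only an illustration: the evenly spaced velocity and direction values and the `REPEATS_PER_CONDITION = 4` factor are assumptions chosen so that the 5 × 8 motion combinations reach the stated 160 conditions per surface. The abstract does not say how the 160 conditions are actually composed.

```python
from itertools import product

NUM_SURFACES = 118  # stated in the abstract

# Assumed values: the abstract gives only the range (20-60 mm/s) and the counts.
velocities_mm_s = [20, 30, 40, 50, 60]       # 5 levels, assumed evenly spaced
directions_deg = [i * 45 for i in range(8)]  # 8 directions, assumed 45 degrees apart
REPEATS_PER_CONDITION = 4                    # hypothetical factor, not from the abstract

# One entry per (velocity, direction, repeat) combination for a single surface.
conditions = [
    (velocity, direction, repeat)
    for velocity, direction in product(velocities_mm_s, directions_deg)
    for repeat in range(REPEATS_PER_CONDITION)
]

total_recordings = NUM_SURFACES * len(conditions)
print(len(conditions), total_recordings)
```

Under these assumptions the enumeration gives 160 conditions per surface and 118 × 160 = 18,880 recordings, matching the figures in the abstract.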

@inproceedings{eguchi2025whc_wip, title = {Cluster Haptic Texture Database: Haptic Texture Database with Controlled Sliding-Contact Interactions}, author = {Eguchi, Michikuni and Hiroi, Yuichi and Hayase, Tomohiro and Hiraki, Takefumi}, booktitle = {2025 IEEE World Haptics Conference Work in Progress (WHC WiP)}, year = {2025}, organization = {IEEE}, month = jul, doi = {10.1109/VRW66409.2025.00288}, } - IEEE VR Poster

An implementation of MagicCraft: Generating Interactive 3D Objects and Their Behaviors from Text for Commercial Metaverse PlatformsRyutaro Kurai, Takefumi Hiraki, Yuichi Hiroi, Yutaro Hirao, Monica Perusquia-Hernandez, Hideaki Uchiyama, and Kiyoshi KiyokawaIn 2025 IEEE Conference on Virtual Reality and 3D User Interfaces Adjunct 2025, Mar 2025

Metaverse platforms are rapidly evolving to provide immersive spaces. However, the generation of dynamic and interactive 3D objects remains a challenge due to the need for advanced 3D modeling and programming skills. We present MagicCraft, a system that generates functional 3D objects from natural language prompts. MagicCraft uses generative AI models to manage the entire content creation pipeline: converting user text descriptions into images, transforming images into 3D models, predicting object behavior, and assigning necessary attributes and scripts. It also provides an interactive interface for users to refine generated objects by adjusting features like orientation, scale, seating positions, and grip points.

@inproceedings{kurai2025magiccraft-poster, author = {Kurai, Ryutaro and Hiraki, Takefumi and Hiroi, Yuichi and Hirao, Yutaro and Perusquia-Hernandez, Monica and Uchiyama, Hideaki and Kiyokawa, Kiyoshi}, title = {An implementation of MagicCraft: Generating Interactive 3D Objects and Their Behaviors from Text for Commercial Metaverse Platforms}, booktitle = {2025 IEEE Conference on Virtual Reality and 3D User Interfaces Adjunct 2025}, year = {2025}, volume = {}, number = {}, pages = {1284-1285}, keywords = {Solid modeling;Three-dimensional displays;Metaverse;Generative AI;Natural languages;Refining;Pipelines;Programming;Predictive models;User interfaces;Metaverse;3D Object Generation;Generative AI;AI-Assisted Design}, month = mar, doi = {10.1109/VRW66409.2025.00288}, } - IEEE VR Workshop

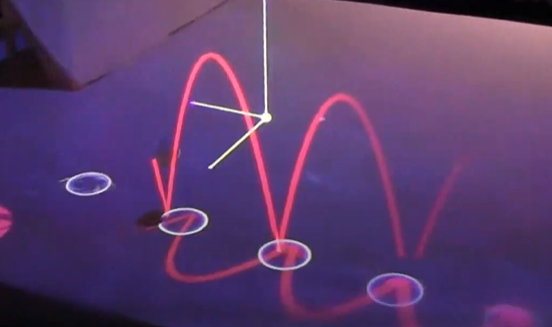

BeamStellar: Low-Latency, 6-DoF Head Tracking for Beaming Displays with Spatio-Temporal LED EncodingJonas Weigand, Yuichi Hiroi, and Yuta ItohIn 2025 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Mar 2025

Conventional augmented reality (AR) head-mounted displays (HMDs) require the integration of all components, including optics and processors, which presents significant barriers to practical implementation due to weight and heat generation concerns. While Beaming Displays address these challenges by offloading computing and projection functions to the environment, existing solutions leave 6-degrees-of-freedom (6DoF) image projection with low motion-to-photon (M2P) latency unaddressed. In this position paper, we introduce our trial BeamStellar, a Beaming Display system that integrates 6DoF head tracking by combining spatio-temporally encoded LED patterns with a position-sensing detector. Through digital signal processing using an FPGA, the system aims for a theoretical latency of less than 200 microseconds. This implementation alleviates the hardware burden on users, facilitating AR experiences that seamlessly blend virtual content with the physical world.

@inproceedings{weigand2025stellar, author = {Weigand, Jonas and Hiroi, Yuichi and Itoh, Yuta}, title = {BeamStellar: Low-Latency, 6-DoF Head Tracking for Beaming Displays with Spatio-Temporal LED Encoding}, booktitle = {2025 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)}, year = {2025}, volume = {}, number = {}, pages = {to appear}, keywords = {Beaming Displays;Optical See-through Display;Low latency head tracking;Spatio-temporal Light pattern}, month = mar, doi = {10.1109/VRW66409.2025.00180}, } - IEEE VR Workshop

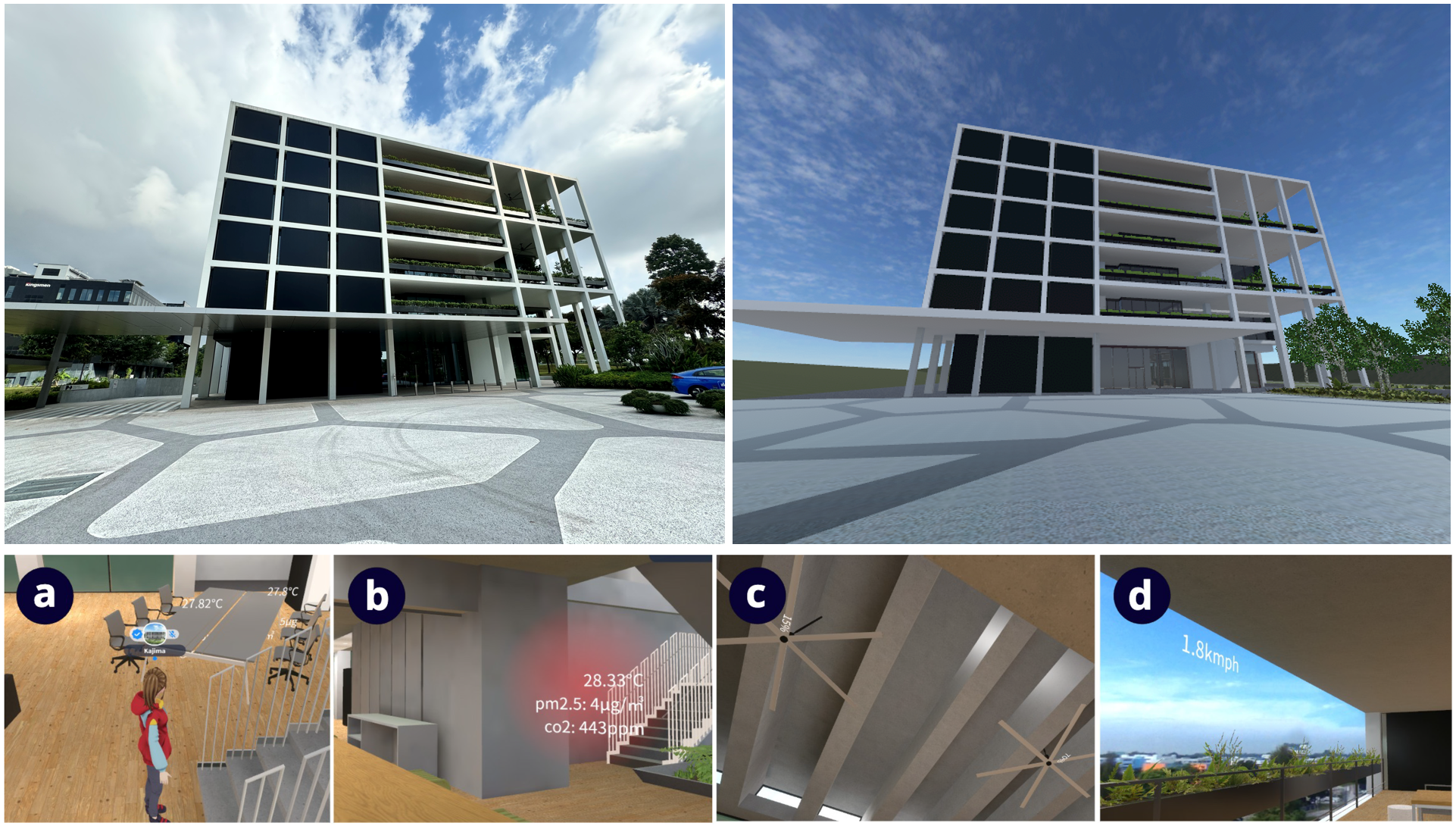

Development of Digital Twin Environment through Integration of Commercial Metaverse Platform and IoT Sensors of Smart BuildingYusuke Masubuchi, Takefumi Hiraki, Yuichi Hiroi, Masanori Ibara, Kazuki Matsutani, Megumi Zaizen, and Junya MoritaIn 2025 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Mar 2025

The digital transformation of smart cities and workplaces requires effective integration of physical and cyber spaces, yet existing digital twin solutions remain limited in supporting real-time, multi-user collaboration. While metaverse platforms enable shared virtual experiences, they have not supported comprehensive integration of IoT sensors in physical spaces, especially for large-scale smart architectural environments. This paper presents a digital twin environment that integrates Kajima Corp.’s smart building facility "The GEAR" in Singapore with the commercial metaverse platform Cluster. Our system consists of three key components: a standardized IoT sensor platform, a real-time data relay system, and an environmental data visualization framework. Quantitative end-to-end latency measurements confirm the feasibility of our approach for real-world applications in large architectural spaces. The proposed framework enables new forms of collaboration that transcend spatial constraints, advancing the development of next-generation interactive environments.

@inproceedings{masubuchi2025digitaltwin, author = {Masubuchi, Yusuke and Hiraki, Takefumi and Hiroi, Yuichi and Ibara, Masanori and Matsutani, Kazuki and Zaizen, Megumi and Morita, Junya}, title = {Development of Digital Twin Environment through Integration of Commercial Metaverse Platform and IoT Sensors of Smart Building}, booktitle = {2025 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)}, year = {2025}, volume = {}, number = {}, pages = {852-855}, keywords = {Digital Twin;Metaverse Integration;Smart Building;IoT Sensors}, month = mar, doi = {10.1109/VRW66409.2025.00173} }

2024

- IEEE TVCG

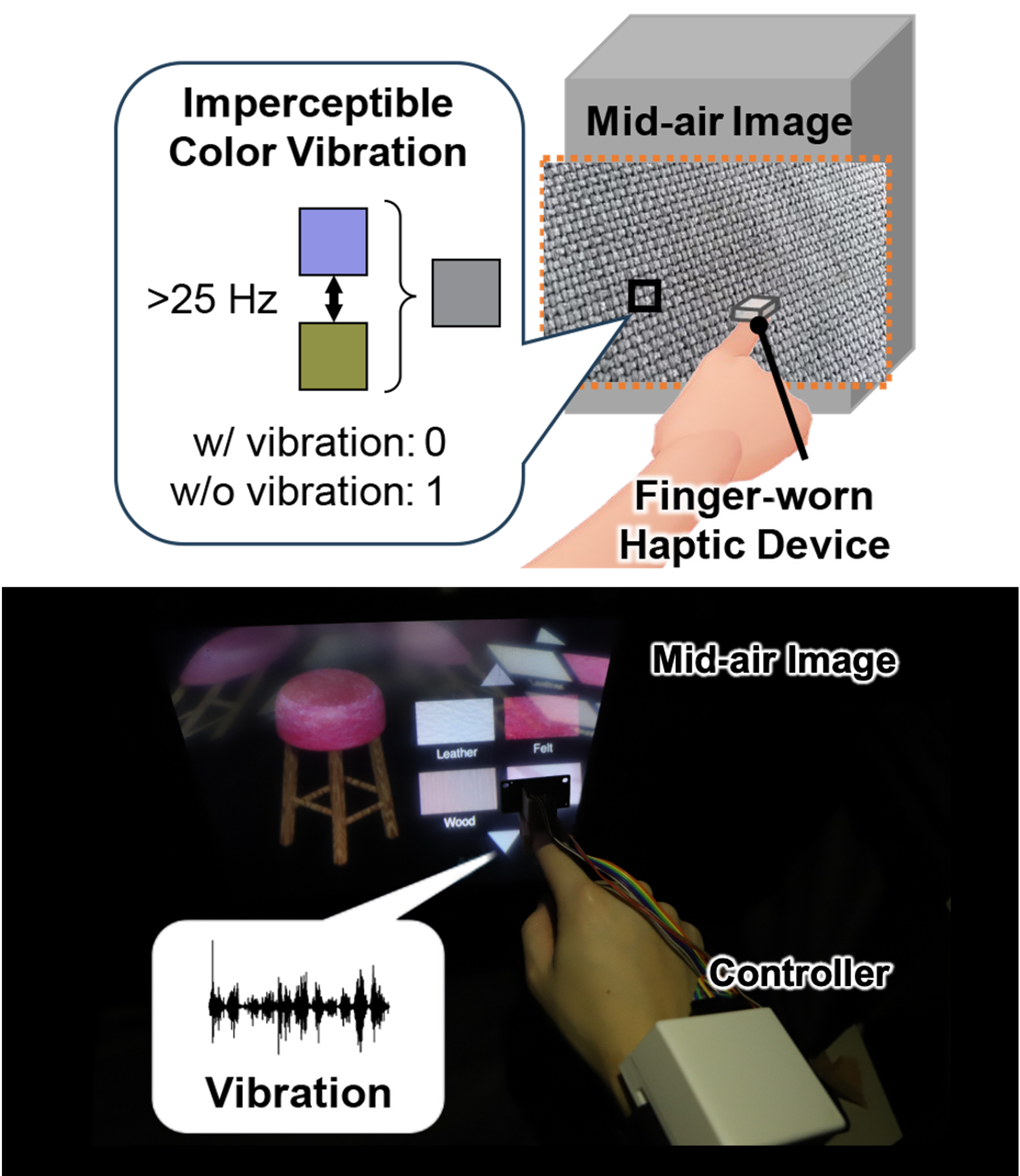

HaptoFloater: Visuo-Haptic Augmented Reality by Embedding Imperceptible Color Vibration Signals for Tactile Display Control in a Mid-Air ImageIEEE Transactions on Visualization and Computer Graphics, Nov 2024

We propose HaptoFloater, a low-latency mid-air visuo-haptic augmented reality (VHAR) system that utilizes imperceptible color vibrations. When adding tactile stimuli to the visual information of a mid-air image, the user should not perceive the latency between the tactile and visual information. However, conventional tactile presentation methods for mid-air images, based on camera-detected fingertip positioning, introduce latency due to image processing and communication. To mitigate this latency, we use a color vibration technique; humans cannot perceive the vibration when the display alternates between two different color stimuli at a frequency of 25 Hz or higher. In our system, we embed this imperceptible color vibration into the mid-air image formed by a micromirror array plate, and a photodiode on the fingertip device directly detects this color vibration to provide tactile stimulation. Thus, our system allows for the tactile perception of multiple patterns on a mid-air image in 59.5 ms. In addition, we evaluate the visual-haptic delay tolerance on a mid-air display using our VHAR system and a tactile actuator with a single pattern and faster response time. The results of our user study indicate a visual-haptic delay tolerance of 110.6 ms, which is considerably larger than the latency associated with systems using multiple tactile patterns.
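The color vibration idea described in this abstract can be sketched as a frame schedule that alternates two colors around a base color. This is a simplified illustration under stated assumptions, not the authors' implementation: the function names, the plain RGB offsets, and the 60 Hz refresh rate are all hypothetical, and a real system would presumably choose its color pairs more carefully than a fixed RGB offset. Only the 25 Hz threshold and the two latency figures come from the abstract.

```python
IMPERCEPTIBLE_MIN_HZ = 25.0  # threshold stated in the abstract
SYSTEM_LATENCY_MS = 59.5     # multi-pattern latency stated in the abstract
DELAY_TOLERANCE_MS = 110.6   # visual-haptic delay tolerance stated in the abstract


def color_vibration_frames(base_rgb, delta_rgb, num_frames):
    """Alternate base+delta and base-delta on successive frames (assumed scheme)."""
    frames = []
    for i in range(num_frames):
        sign = 1 if i % 2 == 0 else -1
        # Clamp each channel to the 8-bit range.
        frames.append(tuple(
            min(255, max(0, c + sign * d)) for c, d in zip(base_rgb, delta_rgb)
        ))
    return frames


def vibration_frequency_hz(refresh_rate_hz):
    """One full vibration cycle spans two frames when alternating every frame."""
    return refresh_rate_hz / 2


def is_imperceptible(refresh_rate_hz):
    """Check the alternation rate against the 25 Hz threshold."""
    return vibration_frequency_hz(refresh_rate_hz) >= IMPERCEPTIBLE_MIN_HZ


frames = color_vibration_frames((128, 128, 128), (10, -10, 0), 4)
print(frames, vibration_frequency_hz(60), is_imperceptible(60))
print(SYSTEM_LATENCY_MS < DELAY_TOLERANCE_MS)
```

With an assumed 60 Hz display, per-frame alternation yields a 30 Hz vibration, above the 25 Hz threshold, and the reported 59.5 ms latency sits below the measured 110.6 ms tolerance.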

@article{nagano2024haptofloater, author = {Nagano, Rina and Kinoshita, Takahiro and Hattori, Shingo and Hiroi, Yuichi and Itoh, Yuta and Hiraki, Takefumi}, journal = {IEEE Transactions on Visualization and Computer Graphics}, title = {HaptoFloater: Visuo-Haptic Augmented Reality by Embedding Imperceptible Color Vibration Signals for Tactile Display Control in a Mid-Air Image}, year = {2024}, volume = {30}, number = {11}, pages = {7463-7472}, keywords = {Haptic interfaces;Vibrations;Visualization;Image color analysis;Actuators;Delays;Tactile sensors;Visuo-haptic displays;mid-air images;LCD displays;imperceptible color vibration}, doi = {10.1109/TVCG.2024.3456175}, issn = {1941-0506}, month = nov, } - IEEE TVCG

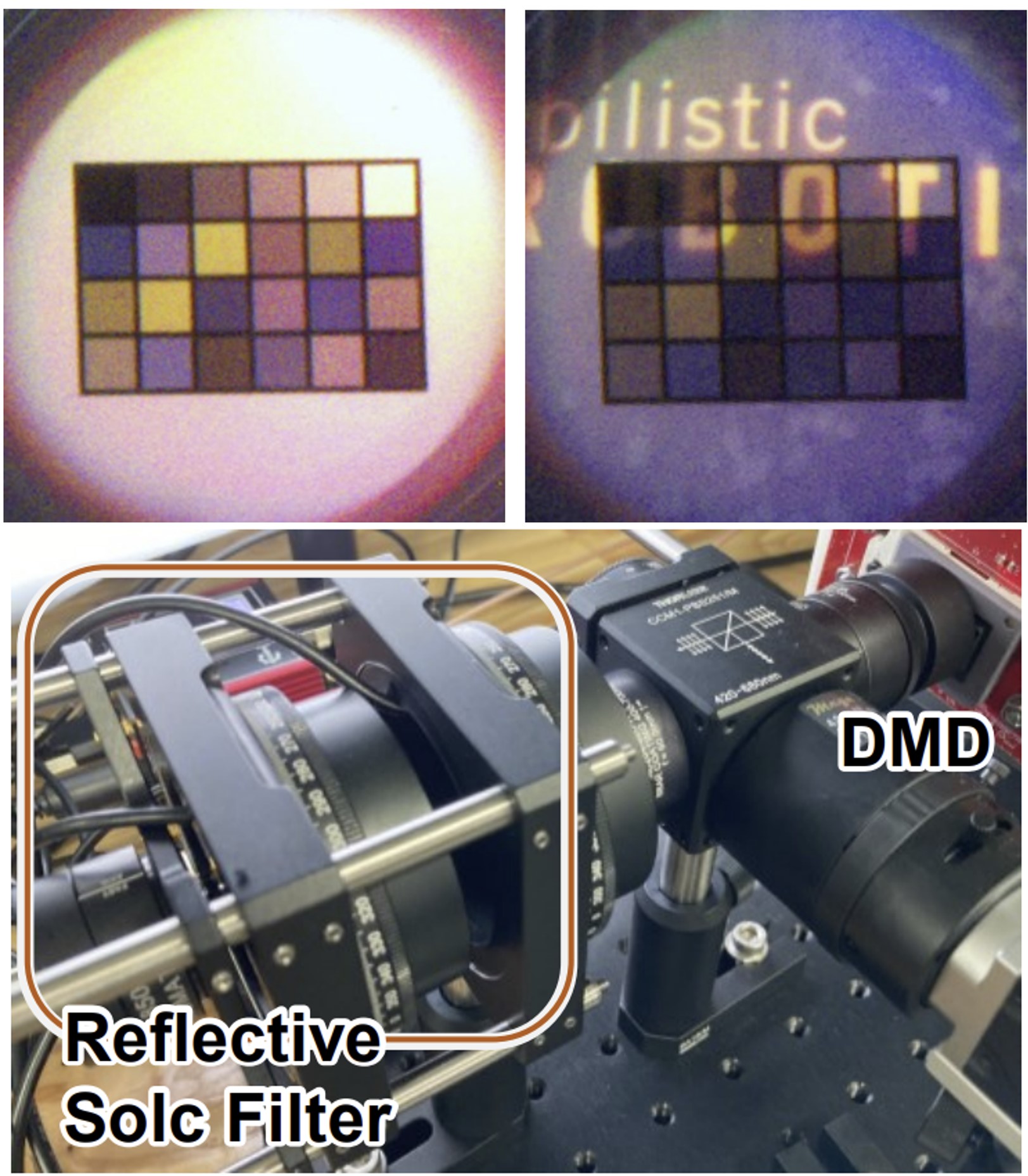

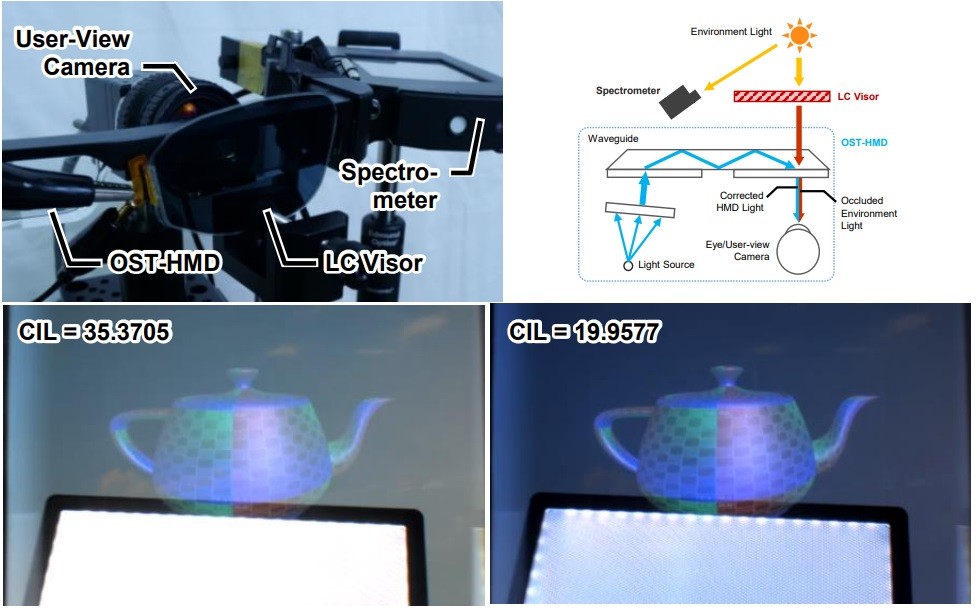

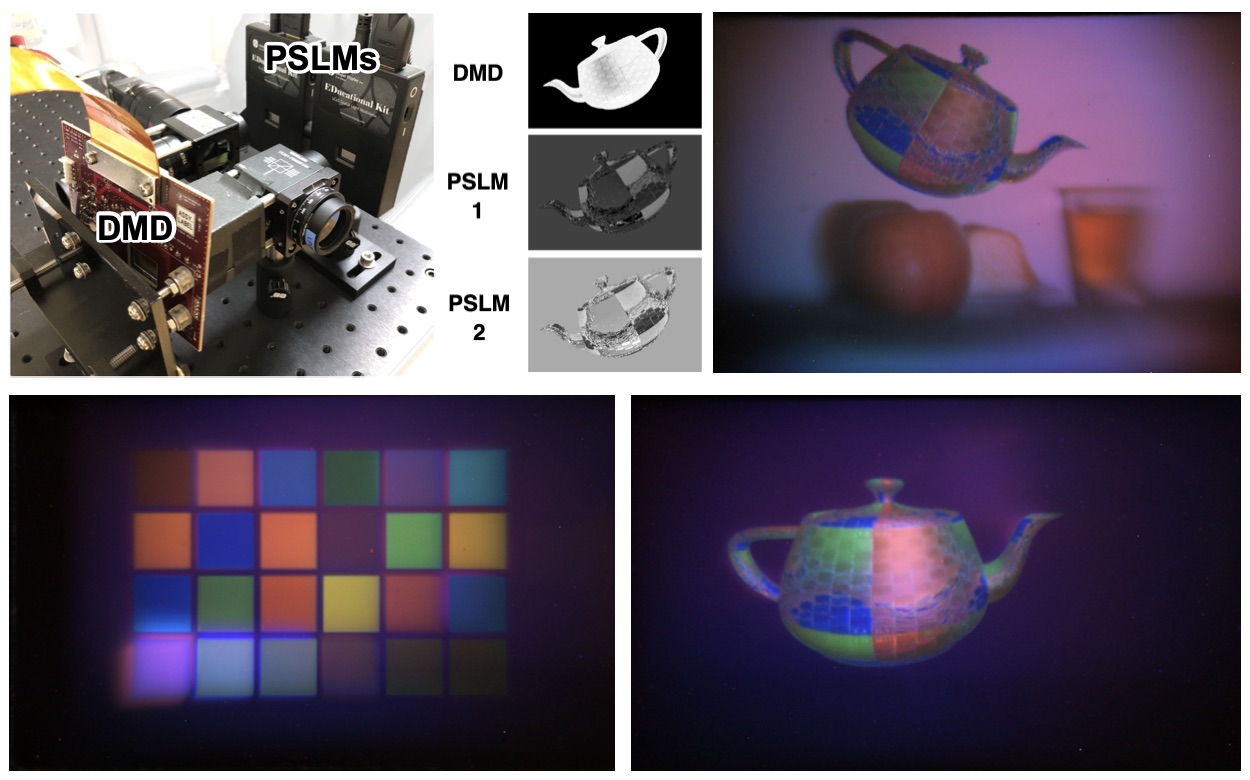

StainedSweeper: Compact, Variable-Intensity Light-Attenuation Display with Sweeping Tunable RetardersYuichi Hiroi, Takefumi Hiraki, and Yuta ItohIEEE Transactions on Visualization and Computer Graphics, May 2024

Light Attenuation Displays (LADs) are a type of Optical See-Through Head-Mounted Display (OST-HMD) that present images by attenuating incoming light with a pixel-wise polarizing color filter. Although LADs can display images in bright environments, there is a trade-off between the number of Spatial Light Modulators (SLMs) and the color gamut and contrast that can be expressed, making it difficult to achieve both high-fidelity image display and a small form factor. To address this problem, we propose StainedSweeper, a LAD that achieves both the wide color gamut and the variable intensity with a single SLM. Our system synchronously controls a pixel-wise Digital Micromirror Device (DMD) and a nonpixel polarizing color filter to pass light when each pixel is the desired color. By sweeping this control at high speed, the human eye perceives images in a time-multiplexed, integrated manner. To achieve this, we develop the OST-HMD design using a reflective Solc filter as a polarized color filter and a color reproduction algorithm based on the optimization of the time-multiplexing matrix for the selected primary color filters. Our proof-of-concept prototype showed that our single SLM design can produce subtractive images with variable contrast and a wider color gamut than conventional LADs.

@article{hiroi2024stainedsweeper, author = {Hiroi, Yuichi and Hiraki, Takefumi and Itoh, Yuta}, journal = {IEEE Transactions on Visualization and Computer Graphics}, title = {StainedSweeper: Compact, Variable-Intensity Light-Attenuation Display with Sweeping Tunable Retarders}, year = {2024}, volume = {30}, number = {5}, pages = {2682-2692}, keywords = {Image color analysis;Optical filters;Filtering algorithms;Light sources;Optical imaging;Optical reflection;Optical polarization;Light attenuation display;see-through display;augmented reality;time-multiplexing;polarized color filter}, doi = {10.1109/TVCG.2024.3372058}, issn = {1941-0506}, month = may, } - IEEE TVCG

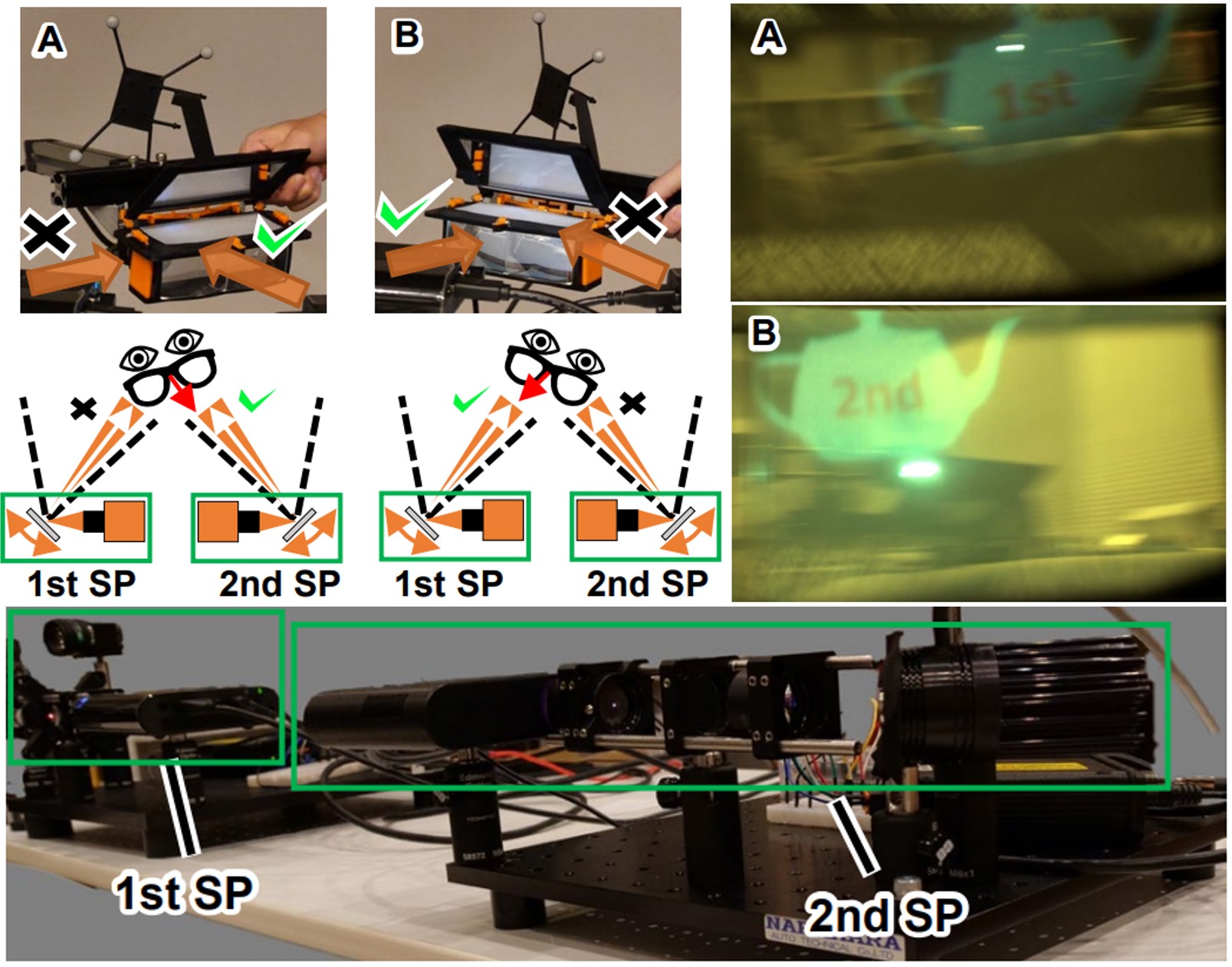

Towards Co-Operative Beaming Displays: Dual Steering Projectors for Extended Projection Volume and Head Orientation RangeHiroto Aoki, Takumi Tochimoto, Yuichi Hiroi, and Yuta ItohIEEE Transactions on Visualization and Computer Graphics, May 2024

Existing near-eye displays (NEDs) have trade-offs related to size, weight, computational resources, battery life, and body temperature. A recent paradigm, beaming display, addresses these trade-offs by separating the NED into a steering projector (SP) for image presentation and a passive headset worn by the user. However, the beaming display is constrained by the projection area of a single SP, which severely limits the head orientations and poses the user can adopt. In this study, we distribute dual steering projectors in the scene to extend the head orientation and pose range of the beaming display by coordinating the dual projections on a passive headset. For cooperative control of each SP, we define a geometric model of the SPs and propose a calibration and projection control method designed for multiple projectors. We present implementations of the system along with evaluations showing that the precision and delay are 1.8 to 5.7 mm and 14.46 ms, respectively, at a distance of about 1 m from the SPs. From this result, our prototype with multiple SPs can project images in the projection area (20 mm × 30 mm) of the passive headset while extending the projectable head orientation. Furthermore, as applications of cooperative control by multiple SPs, we show the possibility of supporting multiple users, improving dynamic range, and binocular presentation.

@article{aoki2024dualbeaming, author = {Aoki, Hiroto and Tochimoto, Takumi and Hiroi, Yuichi and Itoh, Yuta}, journal = {IEEE Transactions on Visualization and Computer Graphics}, title = {Towards Co-Operative Beaming Displays: Dual Steering Projectors for Extended Projection Volume and Head Orientation Range}, year = {2024}, volume = {30}, number = {5}, pages = {2309-2318}, keywords = {Mirrors;Cameras;Headphones;Calibration;Optical imaging;High-speed optical techniques;Head;Near-eye display;Augmented reality;Projectors}, doi = {10.1109/TVCG.2024.3372118}, issn = {1941-0506}, month = may, } - IEEE ISMAR

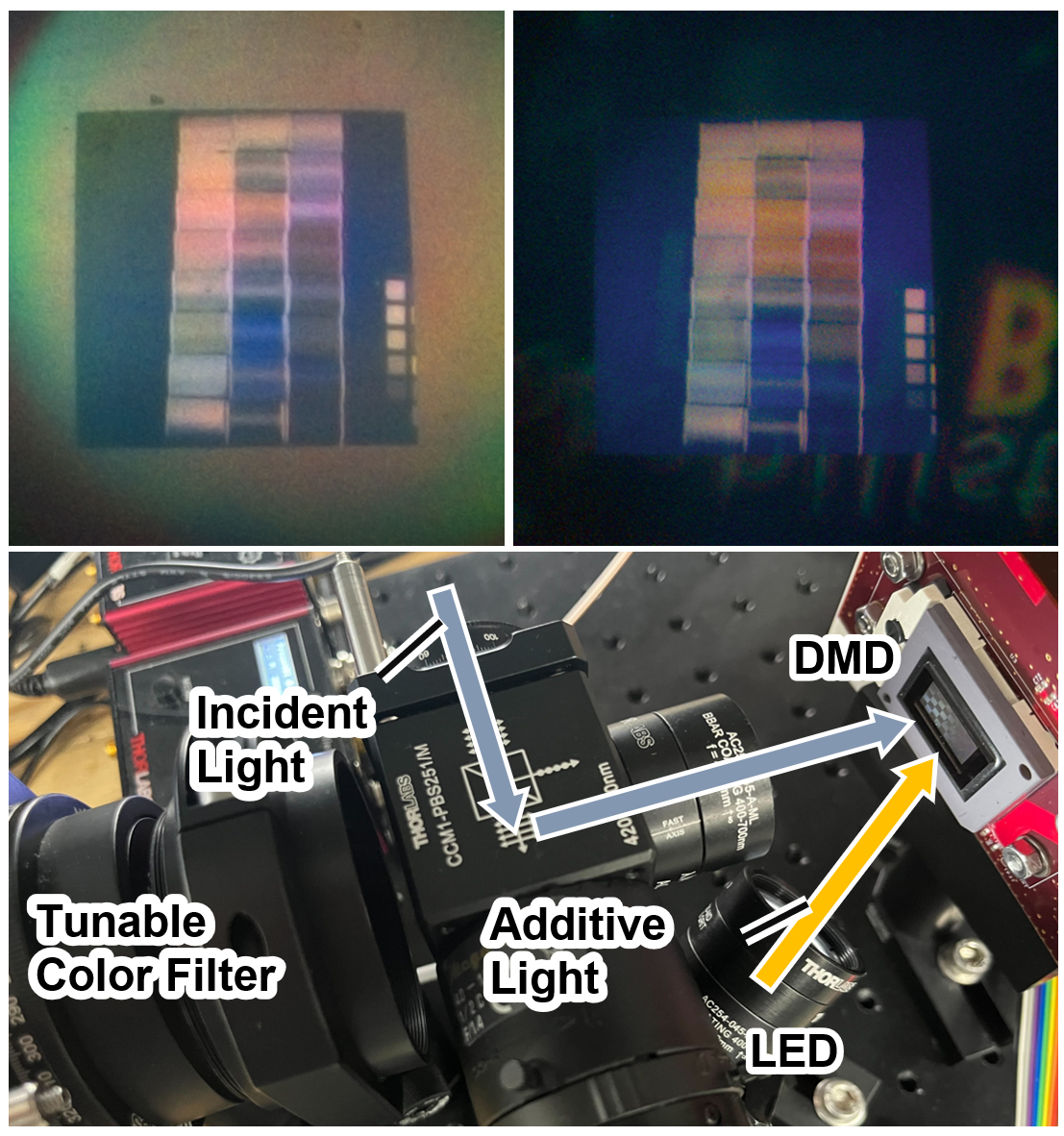

FactoredSweeper: Optical See-Through Display Integrating Light Attenuation and Addition with a Single Spatial Light ModulatorYuichi Hiroi, Takefumi Hiraki, and Yuta ItohIn 2024 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Oct 2024

Light Attenuation Displays (LADs), a subset of Optical See-Through Head-Mounted Displays (OST-HMDs), enable image display in bright environments by filtering incident light at the pixel level. Although recent methods have proposed single-DMD light attenuation, they do not consider additive color display and background compensation, limiting their applicability in real-world scenarios. We present FactoredSweeper, a single digital micromirror device (DMD) system that incorporates both light attenuation and addition. By synchronizing the DMD, color filter, and light source, our system generates an additive virtual image, light attenuation, and occlusion through time multiplexing. To produce the target image while compensating for the background, we optimize time-multiplexed binary DMD patterns and LED/color filter schedules using perceptually-driven non-negative matrix factorization. Simulations and prototypes demonstrate that our integrated attenuation-addition single-SLM system achieves superior dynamic range and perceptual image quality compared to conventional occlusion-capable OST-HMDs using grayscale occlusion masks.

- ISMAR Demo

MetaGadget: IoT Framework for Event-Triggered Integration of User-Developed Devices into Commercial Metaverse PlatformsRyutaro Kurai, Yuichi Hiroi, and Takefumi HirakiIn 2024 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Nov 2024

This demonstration introduces MetaGadget, an IoT framework designed to integrate user-developed devices into commercial metaverse platforms. Synchronizing virtual reality (VR) environments with physical devices has traditionally required a constant connection to VR clients, limiting flexibility and resource efficiency. MetaGadget overcomes these limitations by configuring user-developed devices as IoT units with server capabilities, supporting communication via HTTP protocols within the commercial metaverse platform, Cluster. This approach enables event-triggered device control without the need for persistent connections from metaverse clients. Through the demonstration, users will experience event-triggered interaction between VR and physical devices, as well as real-world device control through the VR space by multiple people. Our framework is expected to reduce technical barriers to integrating VR spaces and custom devices, contribute to interoperability, and increase resource efficiency through event-triggered connections.
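The event-triggered pattern described here, a device that acts as a small HTTP server and reacts only when a request arrives, can be sketched with the Python standard library. This is an assumption-laden illustration rather than the MetaGadget implementation: the `toggle_led` event type, the JSON payload shape, the `device_state` dictionary, and port 8080 are all hypothetical, and how the Cluster platform actually issues its HTTP requests is not specified in the abstract.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical device state; a real device would drive hardware instead.
device_state = {"led_on": False}


def handle_event(event):
    """Apply one event from the virtual space and return the resulting state."""
    if isinstance(event, dict) and event.get("type") == "toggle_led":
        device_state["led_on"] = not device_state["led_on"]
    return dict(device_state)


class DeviceHandler(BaseHTTPRequestHandler):
    """Handles one POST per event, so no persistent connection is required."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            event = json.loads(self.rfile.read(length) or b"{}")
        except json.JSONDecodeError:
            self.send_error(400, "invalid JSON")
            return
        body = json.dumps(handle_event(event)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8080):
    """Run the device-side server until interrupted (port is an assumption)."""
    HTTPServer(("0.0.0.0", port), DeviceHandler).serve_forever()
```

Calling `serve()` on the device starts the listener; each POST with a body such as `{"type": "toggle_led"}` then flips the state once, and the device stays idle between events.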

- ISMAR Poster

Beaming Display Using Thin Holographic Waveguides for Wider Head Orientation Angle RangeIn 2024 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Oct 2024

Augmented Reality (AR) glasses face fundamental challenges related to technical trade-offs. Emerging Beaming Displays (BDs) offer a compelling solution by separating the active and passive components. However, existing BD-based AR glasses have yet to achieve a thin and lightweight design with wide incident projection angles. This work proposes an eyepiece for BDs, including a holographic waveguide with tailored in- and out-coupling gratings. The proposed design aims to achieve a millimeter-thin form factor with a wide tolerance for incident angles, thus overcoming the limitations of existing designs. We have constructed proof-of-concept passive AR glasses prototypes, all approximately 2 mm thick, including one in the form of conventional eyeglasses, and demonstrated an acceptable lateral angle of incidence of up to 90 degrees.

@inproceedings{itoh2024thinholographic, author = {Itoh, Yuta and Nakamura, Tomoya and Hiroi, Yuichi and Aksit, Kaan}, title = {Beaming Display Using Thin Holographic Waveguides for Wider Head Orientation Angle Range}, booktitle = {2024 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)}, year = {2024}, volume = {}, number = {}, pages = {475-476}, keywords = {Beaming display; Augmented reality; Near-eye display; waveguide; HOEs}, doi = {10.1109/ISMAR-Adjunct64951.2024.00138}, month = oct, } - arXiv

PanoTree: Autonomous Photo-Spot Explorer in Virtual Reality ScenesTomohiro Hayase, Sacha Braun, Hikari Yanagawa, Itsuki Orito, and Yuichi HiroiOct 2024

@misc{hayase2024panotree, title = {PanoTree: Autonomous Photo-Spot Explorer in Virtual Reality Scenes}, author = {Hayase, Tomohiro and Braun, Sacha and Yanagawa, Hikari and Orito, Itsuki and Hiroi, Yuichi}, year = {2024}, eprint = {2405.17136}, archiveprefix = {arXiv}, primaryclass = {cs.CV}, } - SIGGRAPH Poster

Measurement of the Imperceptible Threshold for Color Vibration Pairs Selected by using MacAdam EllipseShingo Hattori, Yuichi Hiroi, and Takefumi HirakiIn ACM SIGGRAPH 2024 Posters, Denver, CO, USA, Oct 2024

1st Place in SIGGRAPH 2024 Student Research Competition for Undergraduate Work

We propose an efficient method for searching for color vibration pairs that are imperceptible to the human eye based on the MacAdam ellipse, an experimentally determined color-difference range that is indistinguishable to the human eye. We created color pairs by selecting eight colors within the sRGB color space specified by the ellipse, and conducted experiments to confirm the threshold of color vibration amplitude at which flicker becomes imperceptible to the human eye. The experimental results indicate a general guideline for acceptable amplitudes for pair selection.

@inproceedings{hattori2024macadam, author = {Hattori, Shingo and Hiroi, Yuichi and Hiraki, Takefumi}, title = {Measurement of the Imperceptible Threshold for Color Vibration Pairs Selected by using MacAdam Ellipse}, year = {2024}, isbn = {9798400705168}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3641234.3671041}, doi = {10.1145/3641234.3671041}, booktitle = {ACM SIGGRAPH 2024 Posters}, articleno = {68}, numpages = {2}, keywords = {MacAdam ellipse, color perception, imperceptible color vibration}, location = {Denver, CO, USA}, series = {SIGGRAPH '24}, } - IEEE VR Workshop

Design and Implementation of Agent APIs for Large-Scale Social VR PlatformsRyutaro Kurai, Takefumi Hiraki, Yuichi Hiroi, Yutaro Hirao, Monica Perusquia-Hernandez, Hideaki Uchiyama, and Kiyoshi KiyokawaIn 2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Mar 2024

Implementing an autonomous agent on a social VR platform where many users share space requires diverse information. In particular, the agent must recognize the distance from other users, their orientation toward each other, the avatar’s pose, and text and voice messages, and behave accordingly. This paper proposes an API to obtain the above information on “Cluster,” a multi-device social VR platform in operation, and an agent that uses the API. We have implemented this API using a network proxy. The agent using this API can connect to ChatGPT [7] and have a conversation in real time. We measured the latency required for the conversation and confirmed that the response time was about 1 second.

@inproceedings{kurai2024agentapi, author = {Kurai, Ryutaro and Hiraki, Takefumi and Hiroi, Yuichi and Hirao, Yutaro and Perusquia-Hernandez, Monica and Uchiyama, Hideaki and Kiyokawa, Kiyoshi}, booktitle = { 2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) }, title = {{ Design and Implementation of Agent APIs for Large-Scale Social VR Platforms }}, year = {2024}, volume = {}, issn = {}, pages = {584-587}, keywords = {Three-dimensional displays;Text recognition;Oral communication;Virtual reality;Speech recognition;User interfaces;Software}, doi = {10.1109/VRW62533.2024.00112}, url = {https://doi.ieeecomputersociety.org/10.1109/VRW62533.2024.00112}, publisher = {IEEE Computer Society}, address = {Los Alamitos, CA, USA}, month = mar, }

2023

- IEEE TVCG

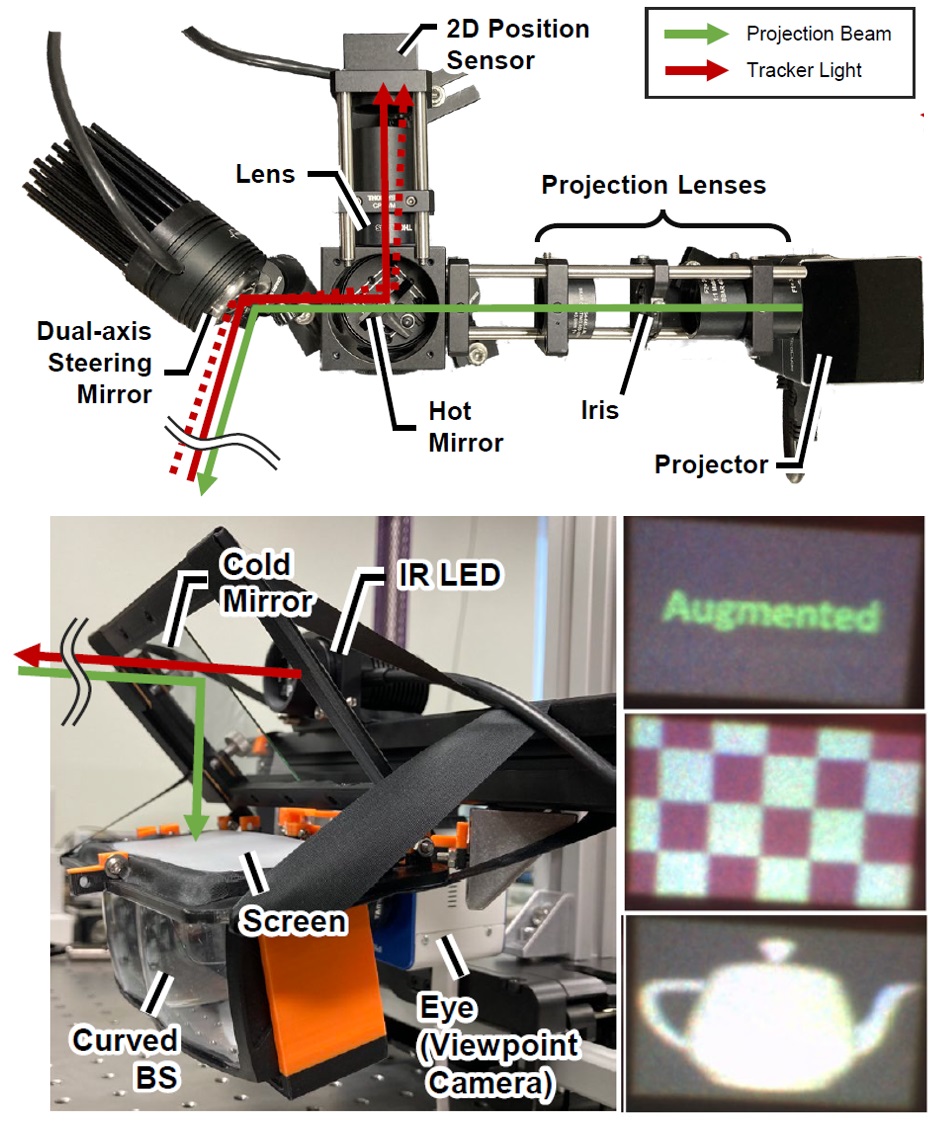

Low-Latency Beaming Display: Implementation of Wearable, 133 μs Motion-to-Photon Latency Near-Eye DisplayYuichi Hiroi, Akira Watanabe, Yuri Mikawa, and Yuta ItohIEEE Transactions on Visualization and Computer Graphics, Nov 2023

This paper presents a low-latency Beaming Display system with a 133 μs motion-to-photon (M2P) latency, the delay from head motion to the corresponding image motion. The Beaming Display represents a recent near-eye display paradigm that involves a steerable remote projector and a passive wearable headset. This system aims to overcome typical trade-offs of Optical See-Through Head-Mounted Displays (OST-HMDs), such as weight and computational resources. However, since the Beaming Display projects a small image onto a moving, distant viewpoint, M2P latency significantly affects displacement. To reduce M2P latency, we propose a low-latency Beaming Display system that can be modularized without relying on expensive high-speed devices. In our system, a 2D position sensor, which is placed coaxially on the projector, detects the light from the IR-LED on the headset and generates a differential signal for tracking. An analog closed-loop control of the steering mirror based on this signal continuously projects images onto the headset. We have implemented a proof-of-concept prototype, evaluated the latency and the augmented reality experience through a user-perspective camera, and discussed the limitations and potential improvements of the prototype.

@article{hiroi2023lowlatency, author = {Hiroi, Yuichi and Watanabe, Akira and Mikawa, Yuri and Itoh, Yuta}, journal = {IEEE Transactions on Visualization and Computer Graphics}, title = {Low-Latency Beaming Display: Implementation of Wearable, 133 μs Motion-to-Photon Latency Near-Eye Display}, year = {2023}, volume = {29}, number = {11}, pages = {4761-4771}, keywords = {Mirrors;Low latency communication;Headphones;Head;Cameras;Tracking;Two dimensional displays;Low-Latency Display;Beaming Display;Motion-to-Photon Latency;Lateral-effect Photodiodes}, doi = {10.1109/TVCG.2023.3320212}, issn = {1941-0506}, month = nov, } - ACM UIST

Telextiles: End-to-end Remote Transmission of Fabric Tactile SensationIn Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology, San Francisco, CA, USA, Nov 2023

The tactile sensation of textiles is critical in determining the comfort of clothing. For remote use, such as online shopping, users cannot physically touch the textile of clothes, making it difficult to evaluate its tactile sensation. Tactile sensing and actuation devices are required to transmit the tactile sensation of textiles. The sensing device needs to recognize different garments, even with hand-held sensors. In addition, the existing actuation device can only present a limited number of known patterns and cannot transmit unknown tactile sensations of textiles. To address these issues, we propose Telextiles, an interface that can remotely transmit tactile sensations of textiles by creating a latent space that reflects the proximity of textiles through contrastive self-supervised learning. We confirm that textiles with similar tactile features are located close to each other in the latent space through a two-dimensional plot. We then compress the latent features for known textile samples into the 1D distance and apply the 16 textile samples to the rollers in the order of the distance. The roller is rotated to select the textile with the closest feature if an unknown textile is detected.
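The last two steps of this abstract, ordering known samples along a 1D distance and picking the closest one for an unknown textile, can be sketched as a nearest-neighbor lookup. This is a minimal illustration under assumptions, not the paper's method: measuring the 1D distance from a chosen reference feature, the Euclidean metric, and the function names are all hypothetical, and the toy 2D features stand in for the learned latent vectors.

```python
import math


def roller_order(known_features, reference):
    """Order sample indices by distance from a reference feature (assumed 1D compression)."""
    return sorted(
        range(len(known_features)),
        key=lambda i: math.dist(known_features[i], reference),
    )


def select_roller_slot(known_features, order, unknown_feature):
    """Return the roller slot holding the known sample closest to the unknown feature."""
    nearest = min(
        range(len(known_features)),
        key=lambda i: math.dist(known_features[i], unknown_feature),
    )
    return order.index(nearest)


# Toy latent features for three known textiles (the paper uses 16 samples).
known = [(0.0, 0.0), (3.0, 4.0), (1.0, 0.0)]
order = roller_order(known, reference=(0.0, 0.0))
slot = select_roller_slot(known, order, unknown_feature=(2.5, 3.5))
print(order, slot)
```

In this toy case the samples are mounted in the order `[0, 2, 1]`, and the unknown feature is closest to sample 1, so the roller would turn to slot 2.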

@inproceedings{kitagishi2023telextiles, author = {Kitagishi, Takekazu and Hiroi, Yuichi and Watanabe, Yuna and Itoh, Yuta and Rekimoto, Jun}, title = {Telextiles: End-to-end Remote Transmission of Fabric Tactile Sensation}, year = {2023}, isbn = {9798400701320}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3586183.3606764}, doi = {10.1145/3586183.3606764}, booktitle = {Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology}, articleno = {67}, numpages = {10}, keywords = {Haptic feedback, Machine learning, Passive haptic feedback, Self supervised learning, Tactile Display, Tactile perception, Texture, Texture perception, Texture recognition}, location = {San Francisco, CA, USA}, series = {UIST '23}, }

- ACM VRST

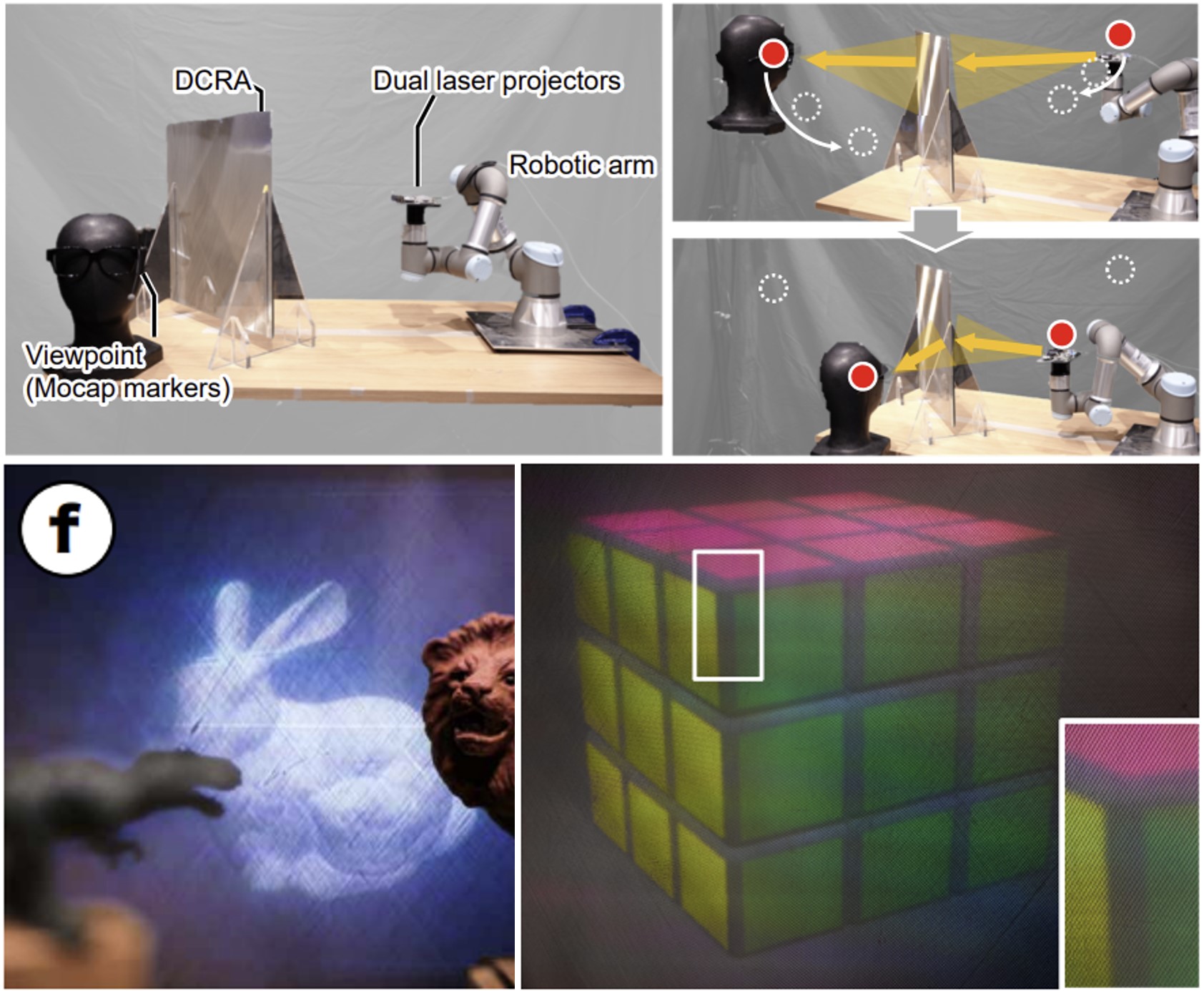

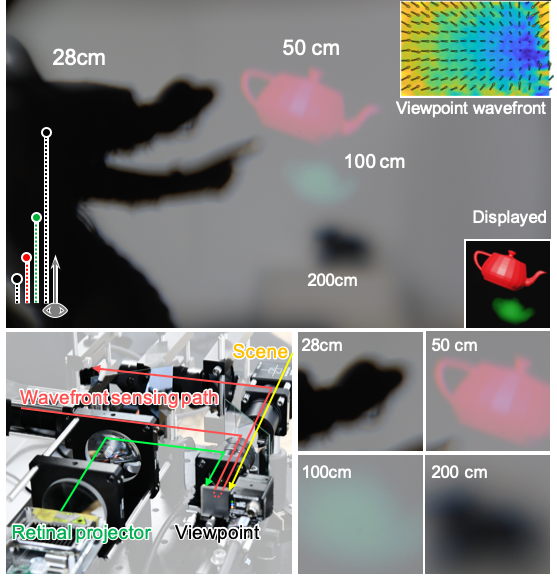

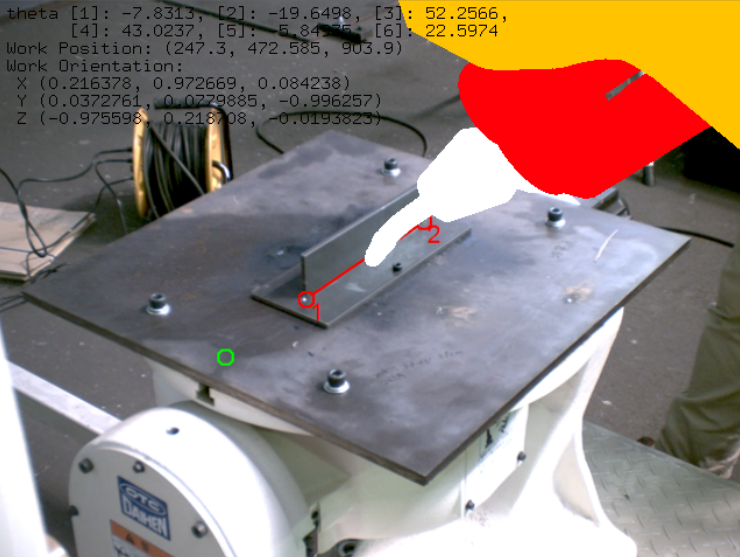

Retinal Homing Display: Head-Tracking Auto-stereoscopic Retinal Projection Display
Hiroto Aoki, Yuichi Hiroi, Yuta Itoh, and Jun Rekimoto
In Proceedings of the 29th ACM Symposium on Virtual Reality Software and Technology, Christchurch, New Zealand, Nov 2023

This paper introduces Retinal Homing Display, which presents focus-free stereoscopic images via retinal projection, thus eliminating the need for the user to wear additional equipment. Traditional 3D displays, typically classified as either naked-eye stereoscopic or wearable, present inherent challenges: the former involves a compromise between resolution and accurate depth perception, while the latter imposes an additional burden on the user. Our proposed display employs optical and mechanical mechanisms to converge projector light at the user’s pupil center, simultaneously tracking eye movements. This lets the user perceive focus-free, high-resolution stereoscopic images without wearable equipment. We implemented a proof-of-concept system utilizing a robotic arm and a Dihedral Corner Reflector Array (DCRA), subsequently evaluating image quality and its eyebox. Finally, we discuss the limitations of the current prototype and outline potential directions for future research.

@inproceedings{aoki2023retinal, author = {Aoki, Hiroto and Hiroi, Yuichi and Itoh, Yuta and Rekimoto, Jun}, title = {Retinal Homing Display: Head-Tracking Auto-stereoscopic Retinal Projection Display}, year = {2023}, isbn = {9798400703287}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3611659.3615715}, doi = {10.1145/3611659.3615715}, booktitle = {Proceedings of the 29th ACM Symposium on Virtual Reality Software and Technology}, articleno = {13}, numpages = {10}, keywords = {autostereoscopic display, motion-following display, retinal projection}, location = {Christchurch, New Zealand}, series = {VRST '23}, }

- IEEE VR

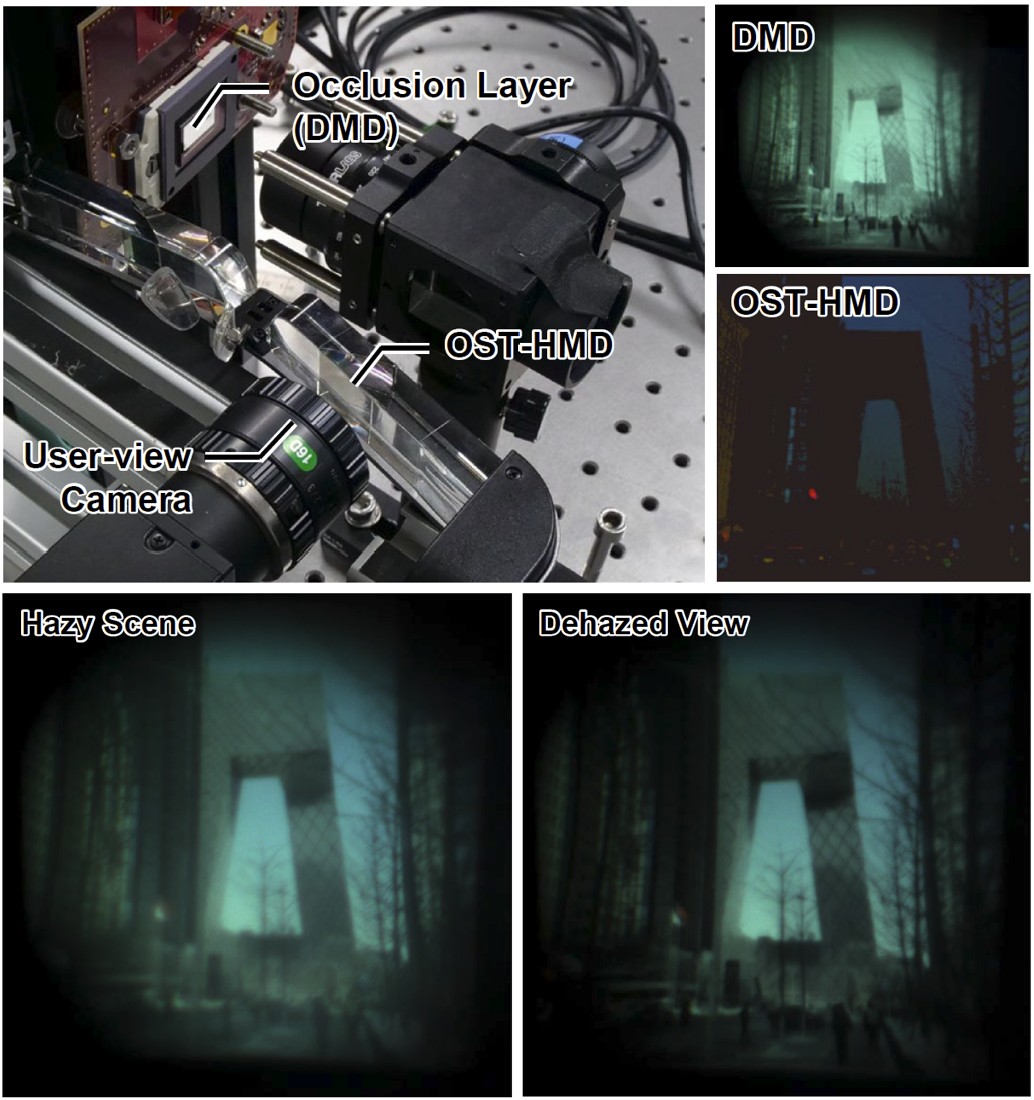

A Compact Photochromic Occlusion Capable See-through Display with Holographic Lenses
Chun-Wei Ooi, Yuichi Hiroi, and Yuta Itoh
In 2023 IEEE Conference Virtual Reality and 3D User Interfaces (VR), Mar 2023

Occlusion is a crucial visual element in optical see-through (OST) augmented reality, however, implementing occlusion in OST displays while addressing various design trade-offs is a difficult problem. In contrast to the traditional method of using spatial light modulators (SLMs) for the occlusion mask, using photochromic materials as occlusion masks can effectively eliminate diffraction artifacts in see-through views due to the lack of electronic pixels, thus providing superior see-through image quality. However, this design requires UV illumination to activate the photochromic material, which traditionally requires multiple SLMs, resulting in a larger form factor for the system. This paper presents a compact photochromic occlusion-capable OST design using multilayer, wavelength-dependent holographic optical lenses (HOLs). Our approach employs a single digital micromirror display (DMD) to form both the occlusion mask with UV light and a virtual image with visible light in a time-multiplexed manner. We demonstrate our proof-of-concept system on a bench-top setup and assess the appearance and contrasts of the displayed image. We also suggest potential improvements for current prototypes to encourage the community to explore this occlusion approach.

@inproceedings{ooi2023photochromic, author = {Ooi, Chun-Wei and Hiroi, Yuichi and Itoh, Yuta}, booktitle = {2023 IEEE Conference Virtual Reality and 3D User Interfaces (VR)}, title = {A Compact Photochromic Occlusion Capable See-through Display with Holographic Lenses}, year = {2023}, volume = {}, number = {}, pages = {237-242}, keywords = {Visualization;Optical diffraction;Three-dimensional displays;Optical design;Prototypes;Holography;User interfaces;Human-centered computing-Human computer interaction (HCI)-Interaction paradigms-Mixed / augmented reality;Human-centered computing-Communication hardware;interfaces and storage-Displays and imagers}, doi = {10.1109/VR55154.2023.00039}, issn = {2642-5254}, month = mar, }

- ISMAR Poster

Dual Beaming Display for Extended Head Orientation and Projection Volume
Takumi Tochimoto, Yuichi Hiroi, and Yuta Itoh
In 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Oct 2023

Existing near-eye displays (NEDs) come with various tradeoffs, including size, weight, and battery life. The new "beaming display" model attempts to address these by splitting the NED into a steering projector (SP) and a passive headset. However, it has limitations in terms of projection area and user head movement. In this research, we utilize two steering projectors to expand the beaming display’s capabilities. We introduce a geometric model and a control method specifically for multiple projectors. Tests indicate an accuracy of 3-19 mm and a delay of 14.46 ms from a 1 m distance to the SPs, allowing projection onto a 20 mm x 30 mm area of the headset. This method not only expands head movement possibilities but also shows potential for multiple users and improved dynamic range and binocular presentation.

@inproceedings{tochimoto2023dualbeaming, author = {Tochimoto, Takumi and Hiroi, Yuichi and Itoh, Yuta}, booktitle = {2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)}, title = {Dual Beaming Display for Extended Head Orientation and Projection Volume}, year = {2023}, volume = {}, number = {}, pages = {377-378}, keywords = {Headphones;Design methodology;Geometric modeling;Display systems;Focusing;Dynamic range;Delays;Human-centered computing;Visualization;Visualization techniques;Treemaps; Human-centered computing;Visualization design and evaluation methods}, doi = {10.1109/ISMAR-Adjunct60411.2023.00081}, issn = {2771-1110}, month = oct, }

- AHs Poster

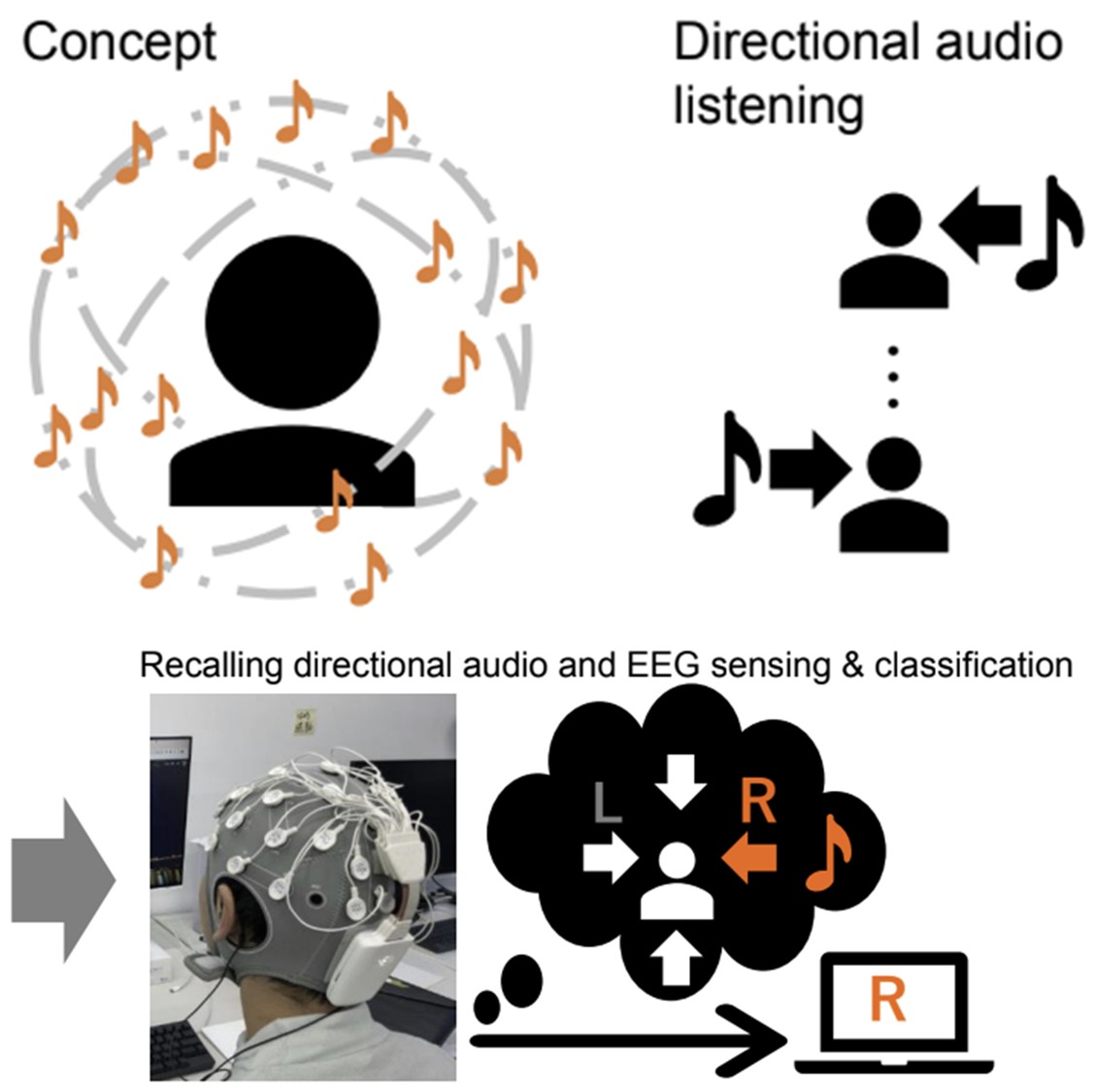

Brain-Computer Interface using Directional Auditory Perception
Yuto Koike, Yuichi Hiroi, Yuta Itoh, and Jun Rekimoto
In Proceedings of the Augmented Humans International Conference 2023, Glasgow, United Kingdom, Oct 2023

Best Poster Honorable Mention

We investigate the potential of brain-computer interface (BCI) using electroencephalogram (EEG) induced by listening (or recalling) auditory stimuli of different directions. In the initial attempt, we apply a time series classification model based on deep learning to the EEG to demonstrate whether each EEG can be classified by recognizing binary (left or right) auditory directions. The results showed high classification accuracy when trained and tested on the same users. Discussion is provided to further explore this topic.

@inproceedings{koike2023braincomputer, author = {Koike, Yuto and Hiroi, Yuichi and Itoh, Yuta and Rekimoto, Jun}, title = {Brain-Computer Interface using Directional Auditory Perception}, year = {2023}, isbn = {9781450399845}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3582700.3583713}, doi = {10.1145/3582700.3583713}, booktitle = {Proceedings of the Augmented Humans International Conference 2023}, pages = {342–345}, numpages = {4}, keywords = {BCI, BMI, Brain-computer Interface, Brain-machine Interface, Directional Auditory Sensation, EEG, Electroencephalogram, Electroencephalography}, location = {Glasgow, United Kingdom}, series = {AHs '23}, }

2022

- Opt. Exp.

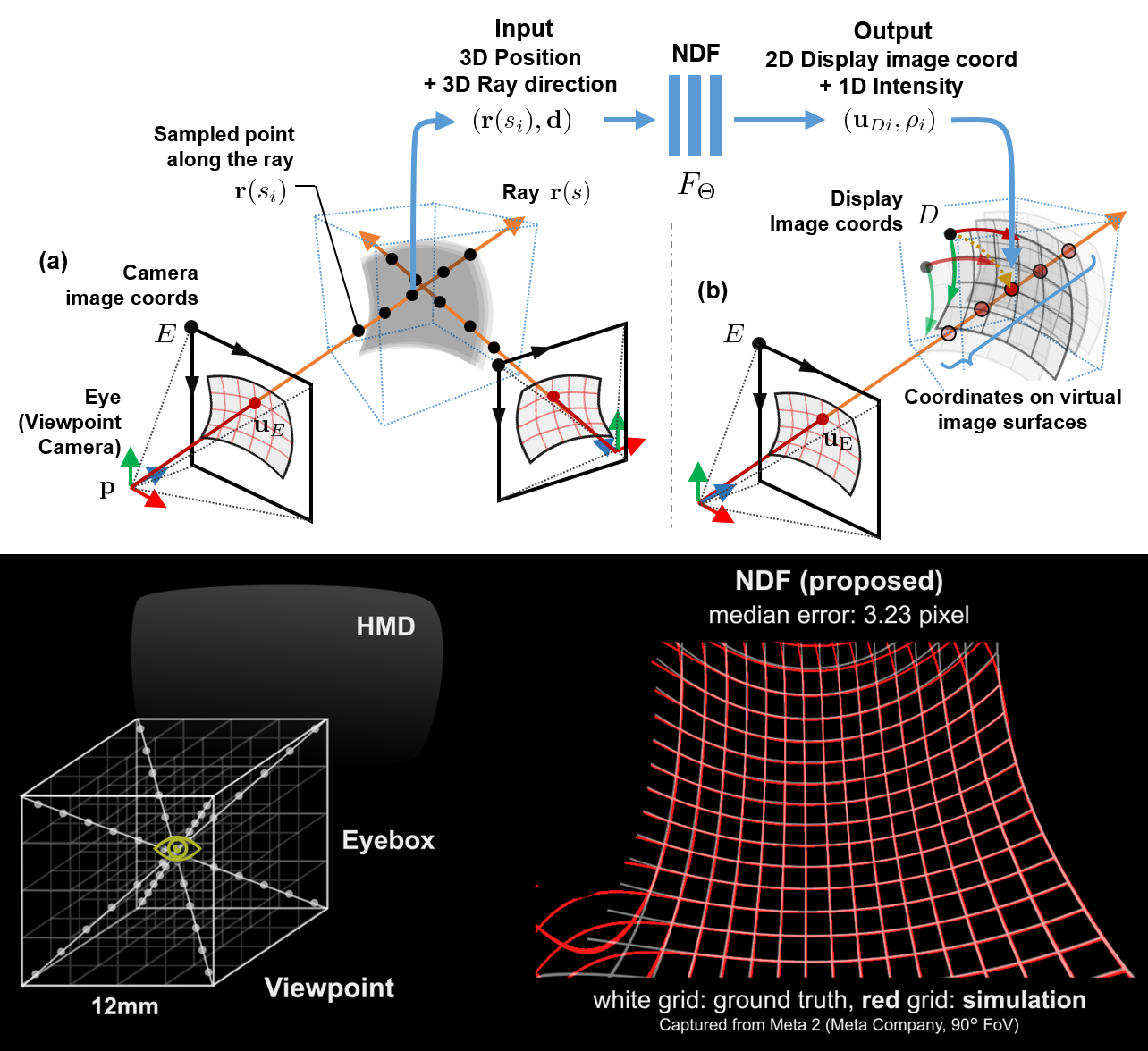

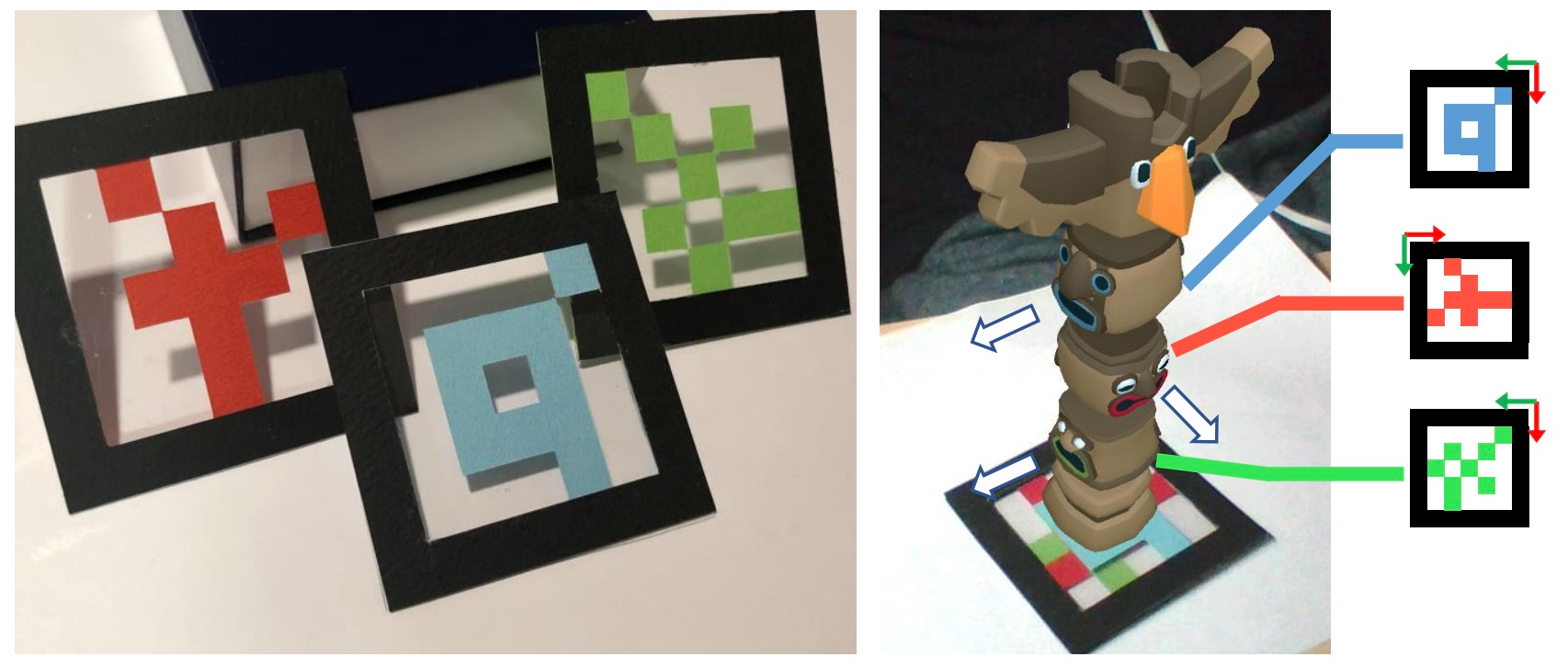

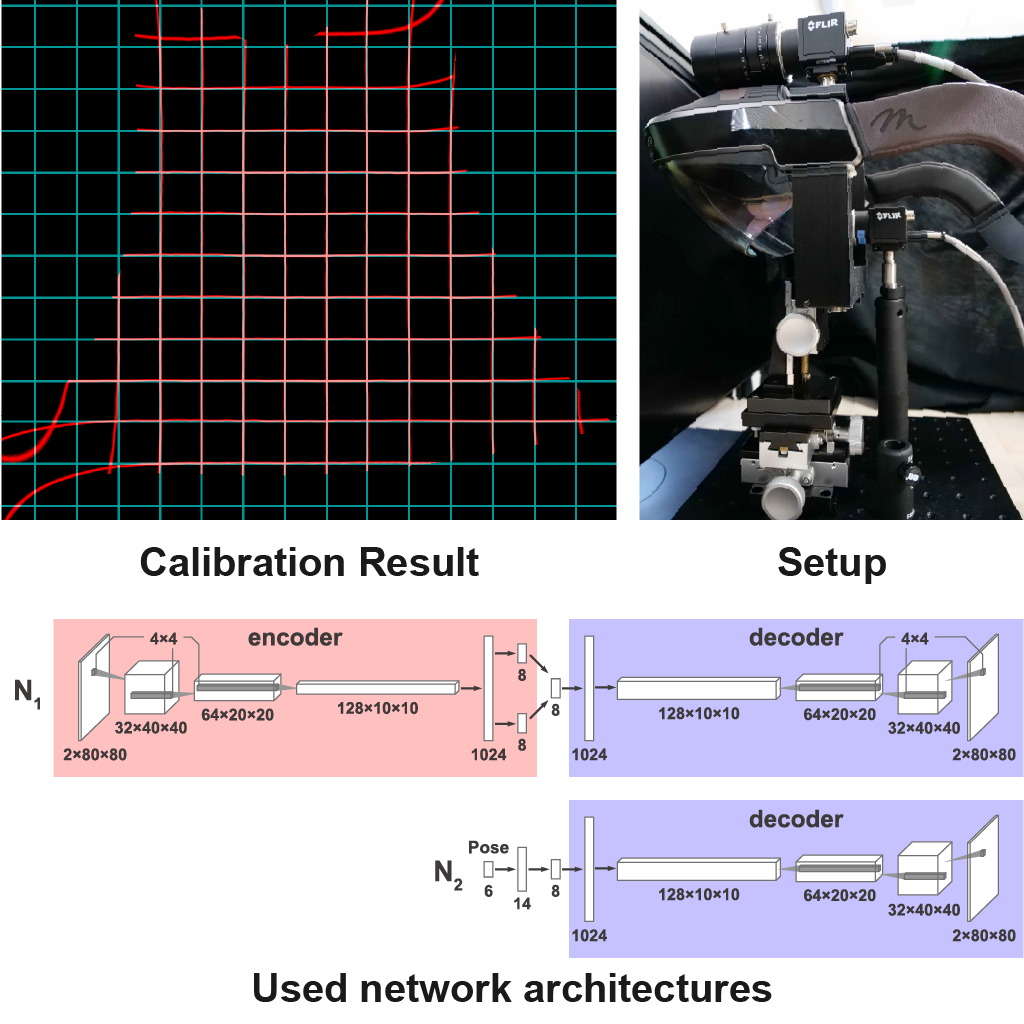

Neural distortion fields for spatial calibration of wide field-of-view near-eye displays
Yuichi Hiroi, Kiyosato Someya, and Yuta Itoh
Opt. Express, Oct 2022

Japan’s Leading Optics Research in 2022 (Top 30), Optical Society of Japan

We propose a spatial calibration method for wide field-of-view (FoV) near-eye displays (NEDs) with complex image distortions. Image distortions in NEDs can destroy the reality of the virtual object and cause sickness. To achieve distortion-free images in NEDs, it is necessary to establish a pixel-by-pixel correspondence between the viewpoint and the displayed image. Designing compact and wide-FoV NEDs requires complex optical designs. In such designs, the displayed images are subject to gaze-contingent, non-linear geometric distortions, which explicit geometric models can be difficult to represent or computationally intensive to optimize. To solve these problems, we propose neural distortion field (NDF), a fully-connected deep neural network that implicitly represents display surfaces complexly distorted in spaces. NDF takes spatial position and gaze direction as input and outputs the display pixel coordinate and its intensity as perceived in the input gaze direction. We synthesize the distortion map from a novel viewpoint by querying points on the ray from the viewpoint and computing a weighted sum to project output display coordinates into an image. Experiments showed that NDF calibrates an augmented reality NED with 90° FoV with about 3.23 pixel (5.8 arcmin) median error using only 8 training viewpoints. Additionally, we confirmed that NDF calibrates more accurately than the non-linear polynomial fitting, especially around the center of the FoV.

@article{hiroi2022neural, author = {Hiroi, Yuichi and Someya, Kiyosato and Itoh, Yuta}, journal = {Opt. Express}, keywords = {Image metrics; Lens design; Near eye displays; Optical aberration; Optical systems; Systems design}, number = {22}, pages = {40628--40644}, publisher = {Optica Publishing Group}, title = {Neural distortion fields for spatial calibration of wide field-of-view near-eye displays}, volume = {30}, month = oct, year = {2022}, url = {https://opg.optica.org/oe/abstract.cfm?URI=oe-30-22-40628}, doi = {10.1364/OE.472288}, }

- ACM VRST

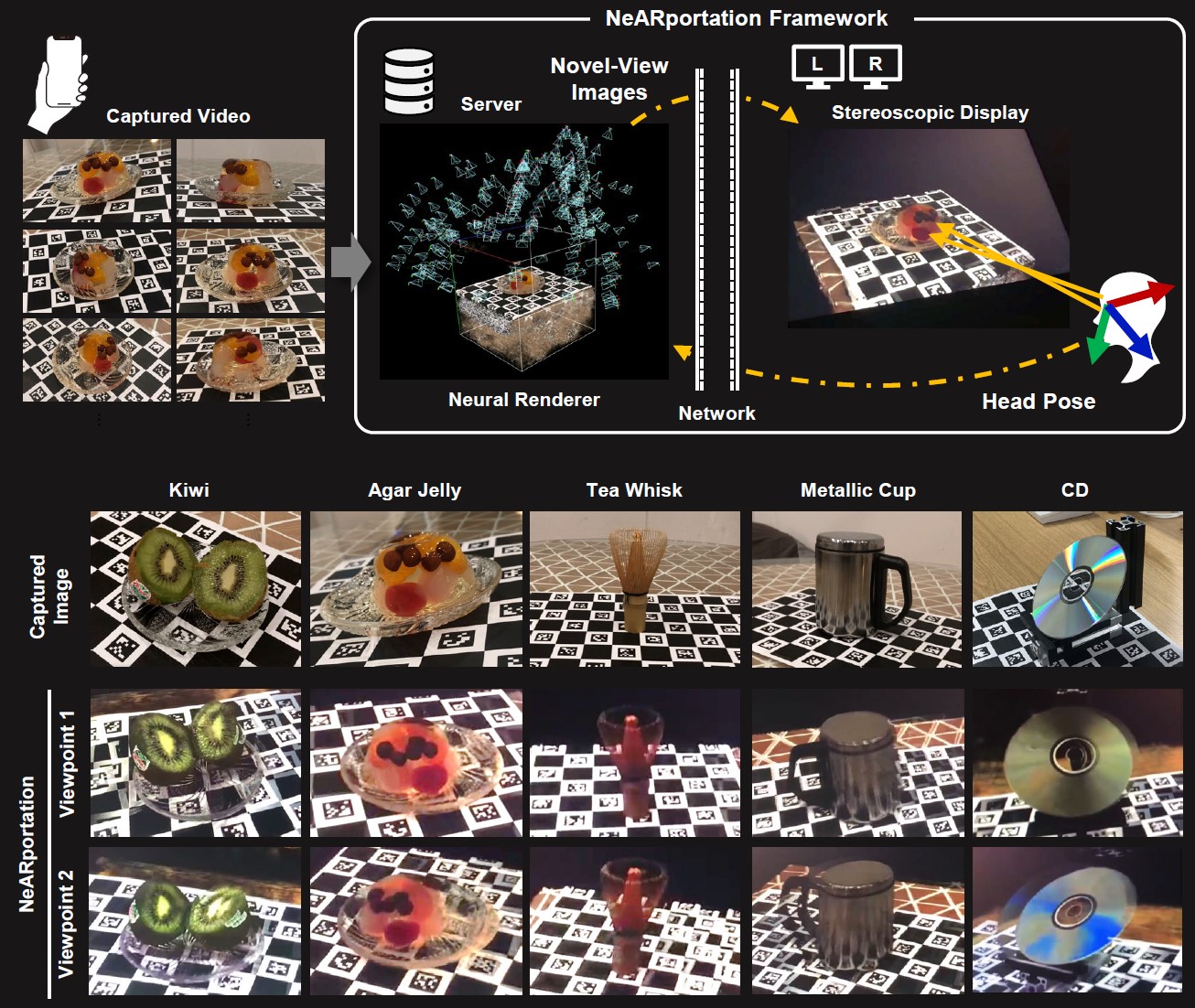

NeARportation: A Remote Real-time Neural Rendering Framework
Yuichi Hiroi, Yuta Itoh, and Jun Rekimoto
In Proceedings of the 28th ACM Symposium on Virtual Reality Software and Technology, Tsukuba, Japan, Oct 2022

While presenting a photorealistic appearance plays a major role in immersion in Augmented Virtuality environment, displaying that of real objects remains a challenge. Recent developments in photogrammetry have facilitated the incorporation of real objects into virtual space. However, reproducing complex appearances, such as subsurface scattering and transparency, still requires a dedicated environment for measurement and possesses a trade-off between rendering quality and frame rate. Our NeARportation framework combines server–client bidirectional communication and neural rendering to resolve these trade-offs. Neural rendering on the server receives the client’s head posture and generates a novel-view image with realistic appearance reproduction that is streamed onto the client’s display. By applying our framework to a stereoscopic display, we confirm that it can display a high-fidelity appearance on full-HD stereo videos at 35-40 frames per second (fps) according to the user’s head motion.

@inproceedings{hiroi2022nearportation, author = {Hiroi, Yuichi and Itoh, Yuta and Rekimoto, Jun}, title = {NeARportation: A Remote Real-time Neural Rendering Framework}, year = {2022}, isbn = {9781450398893}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3562939.3565616}, doi = {10.1145/3562939.3565616}, booktitle = {Proceedings of the 28th ACM Symposium on Virtual Reality Software and Technology}, articleno = {23}, numpages = {5}, keywords = {remote rendering, real-time rendering, neural rendering, augmented virtuality, appearance reproduction}, location = {Tsukuba, Japan}, series = {VRST '22}, }

- IEEE ISMAR

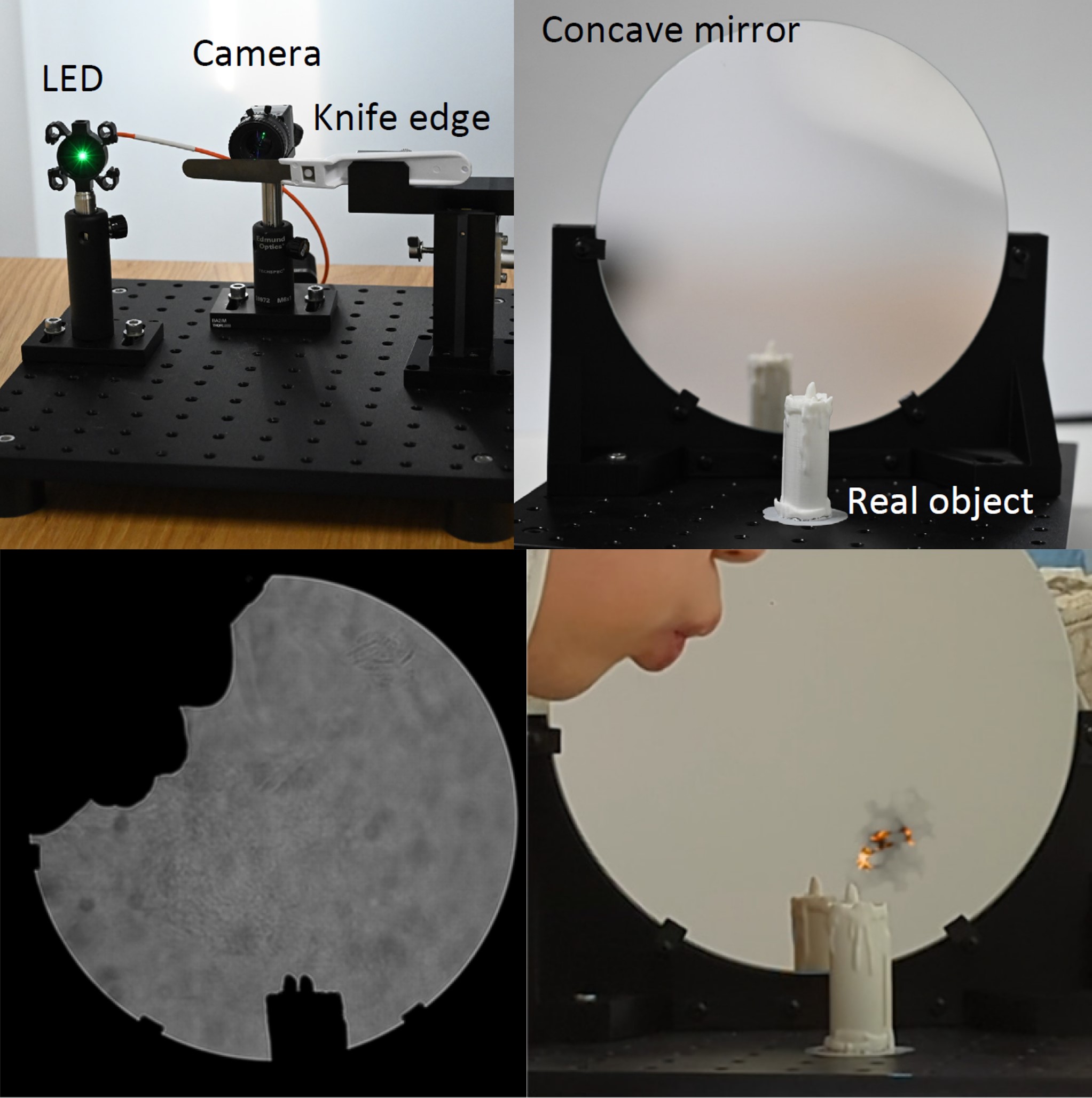

Towards Spatial Airflow Interaction: Schlieren Imaging for Augmented Reality
Zhang Zhibin, Yuichi Hiroi, and Yuta Itoh
In 2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Oct 2022